The Ultimate Guide to Enterprise GenAI Tools

Sep 26, 2024

The buzz around GenAI is everywhere. 62% of business and technology leaders told Deloitte that ‘excitement’ is their top sentiment about the technology. 46% chose fascination. Nearly 80% of leaders expect GenAI to transform their organization within three years.

These expectations are already translating into action on the ground. McKinsey finds that 65% of organizations are regularly using GenAI in at least one business function. In marketing and sales, adoption has doubled over the last year. Organizations are spending over 5% of their digital budgets on enterprise GenAI tools and services.

Yet, a vast majority of them are struggling to operationalize GenAI purposefully and securely. Signing up for ChatGPT or integrating Copilot into the Microsoft stack doesn’t produce the ‘transformation’ enterprises seek.

While there is some increase in productivity, the additional value in terms of growth, innovation or business models is yet to materialize. So, what can they do?

Let’s find out. But first, a quick primer.

What are GenAI tools?

Enterprise generative AI tools are software programs that enable organizations to create text content, design images, produce audio/video, analyze data, etc. For the purposes of this blog post, an enterprise GenAI tool is any of the following.

Large language models (LLMs): These are pre-trained deep learning models capable of understanding natural language input and producing relevant content in return.

Interfaces: Also known as chatbots or assistants, interfaces are the gateway through which one interacts with the LLM. ChatGPT, Google Gemini and Tune Chat are all examples of GenAI interfaces.

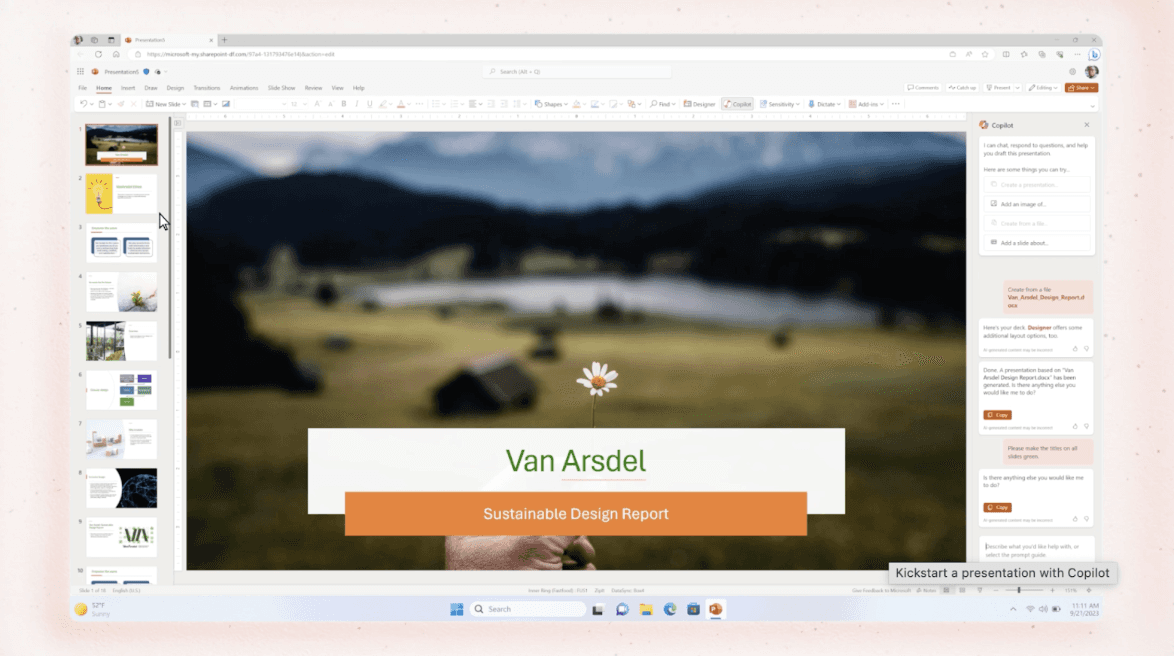

Screenshot of the interface of Microsoft Copilot (Source: Microsoft)

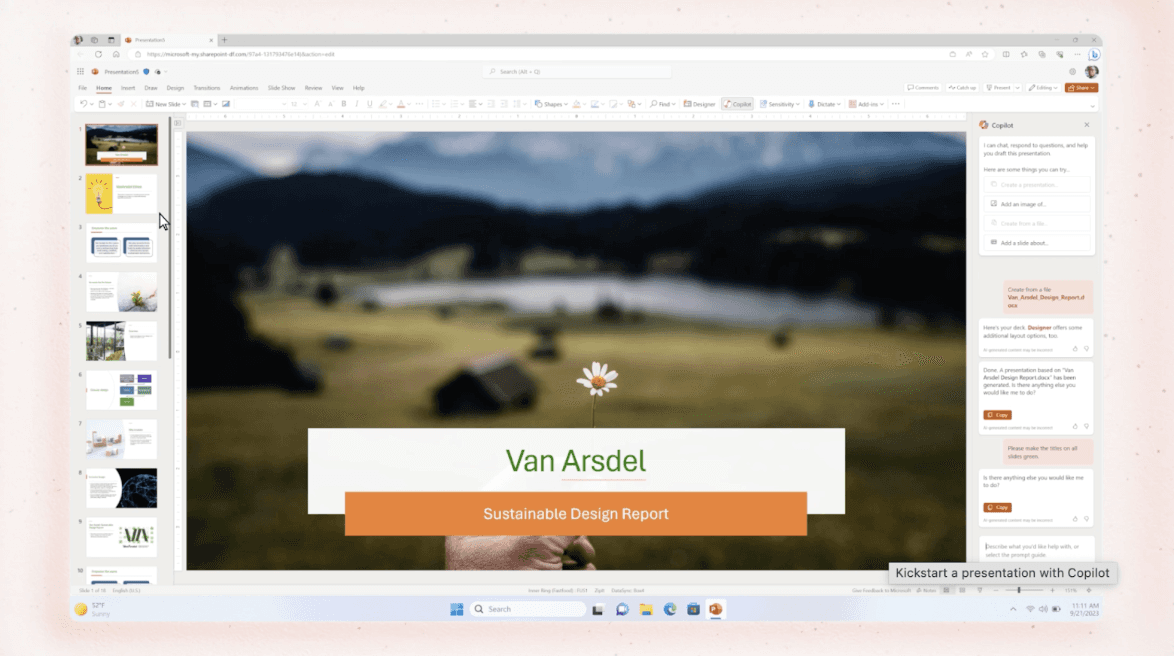

Integrated AI: Most applications today—from WhatsApp to Gmail—come with integrated GenAI tools, often powered by publicly available LLMs.

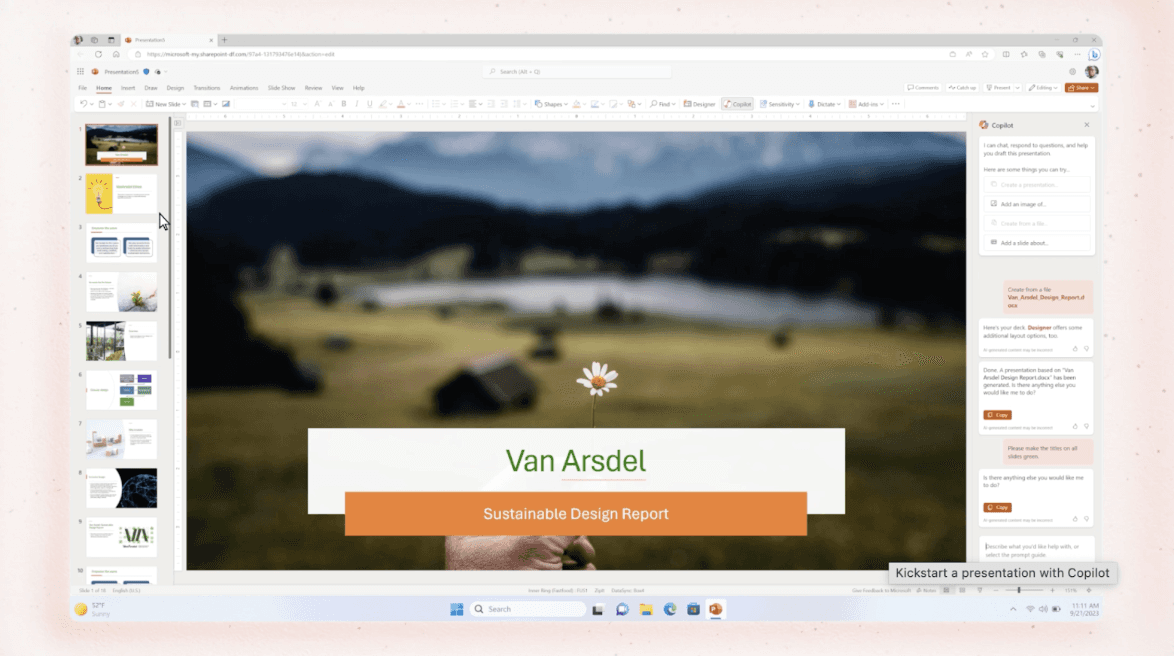

Screenshot of Microsoft Copilot integrated into PowerPoint (Source: Microsoft)

Fine-tuning: For enterprise-level customizations and use case-driven implementations, there are numerous fine-tuning tools, such as Tune Studio.

Infrastructure: Cloud or on-prem infrastructure for deploying enterprise GenAI, such as NVIDIA DGX and Amazon AI infrastructure.

We explore the top tools in each of the above categories later in this article. But before that, let’s see why you need any of these tools at all.

Why does an enterprise need tools for GenAI?

As technology evolves, creating an LLM becomes increasingly complex and resource-intensive. For instance, if you get into the build vs. buy discussion for LLMs and decide to build your own, you’ll need an enormous budget, flexible deadlines and highly skilled teams just to try.

Even if you do succeed, your product might not be competitive with the latest tools in the market. Enterprises have long understood this, which is why we see dramatic growth in buying SaaS tools for non-core requirements. The same goes for GenAI tools.

Today’s leading enterprise GenAI tools are:

State-of-the-art and regularly updated

Innovative and rapidly evolving with the advancements in technology

Simple to implement/integrate, powered by cloud-native tech and APIs

Secure and consistently upgraded for advanced security and privacy requirements

Robust, built for enterprise-grade demands

Easy for non-tech users to embrace and have fun along the way

In essence, using a tool is simpler, cheaper and more convenient than building your own, especially for experimental projects.

Top enterprise GenAI tools for every need

It’s hard to believe that the turning point in generative AI technology, the launch of ChatGPT, was barely two years ago. Since then, thousands of GenAI-powered products have mushroomed across use cases and needs. Here are the notable ones for you to consider.

Large language models

To qualify as large, an AI model typically needs to have billions of parameters. The models below are pre-trained on generalized data and ready for use or further specialized training.

GPT-4o

Developed by OpenAI, GPT-4o is a multilingual, multimodal LLM that accepts text, audio and image input and generates text, audio and image output. In addition to having a textual conversation, GPT-4o can describe images, summarize text, understand diagrams, etc. It is available through an API for enterprise customizations and fine-tuning.

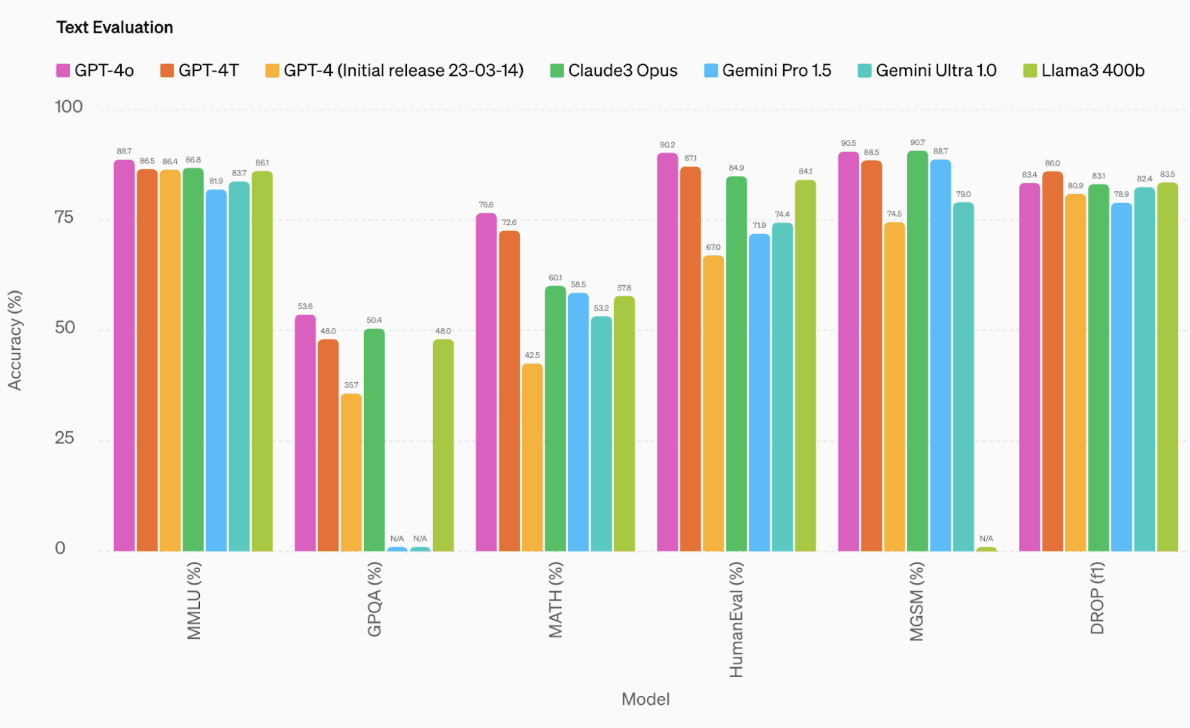

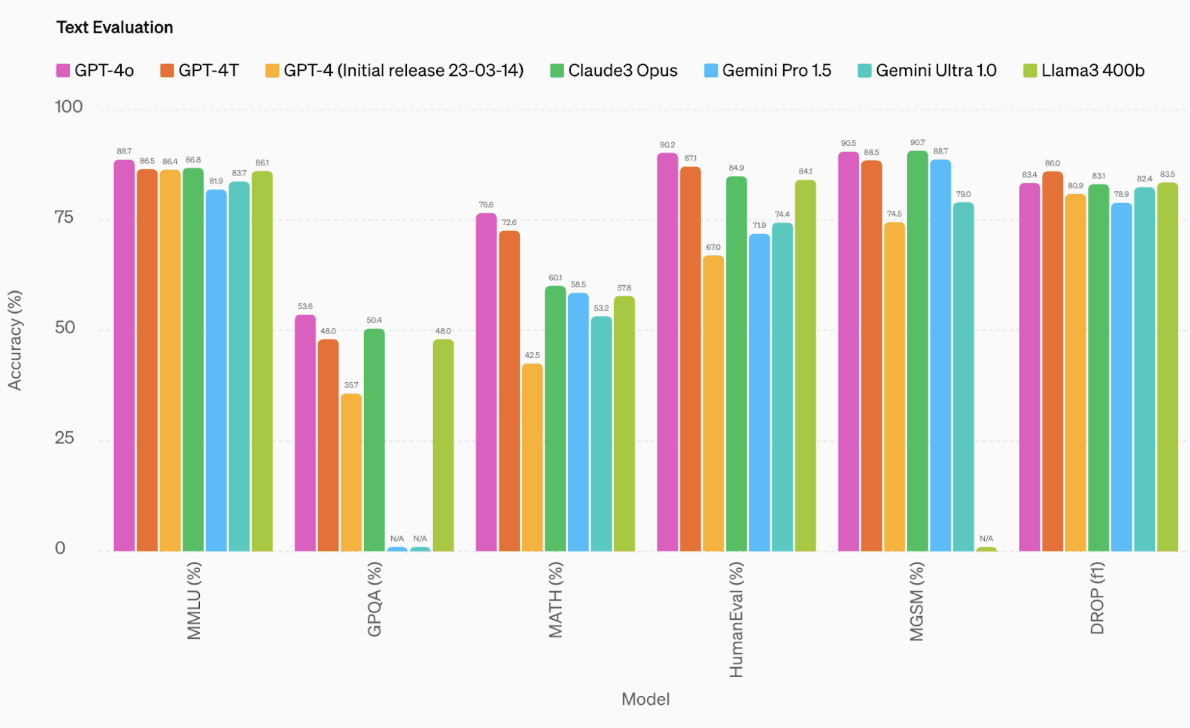

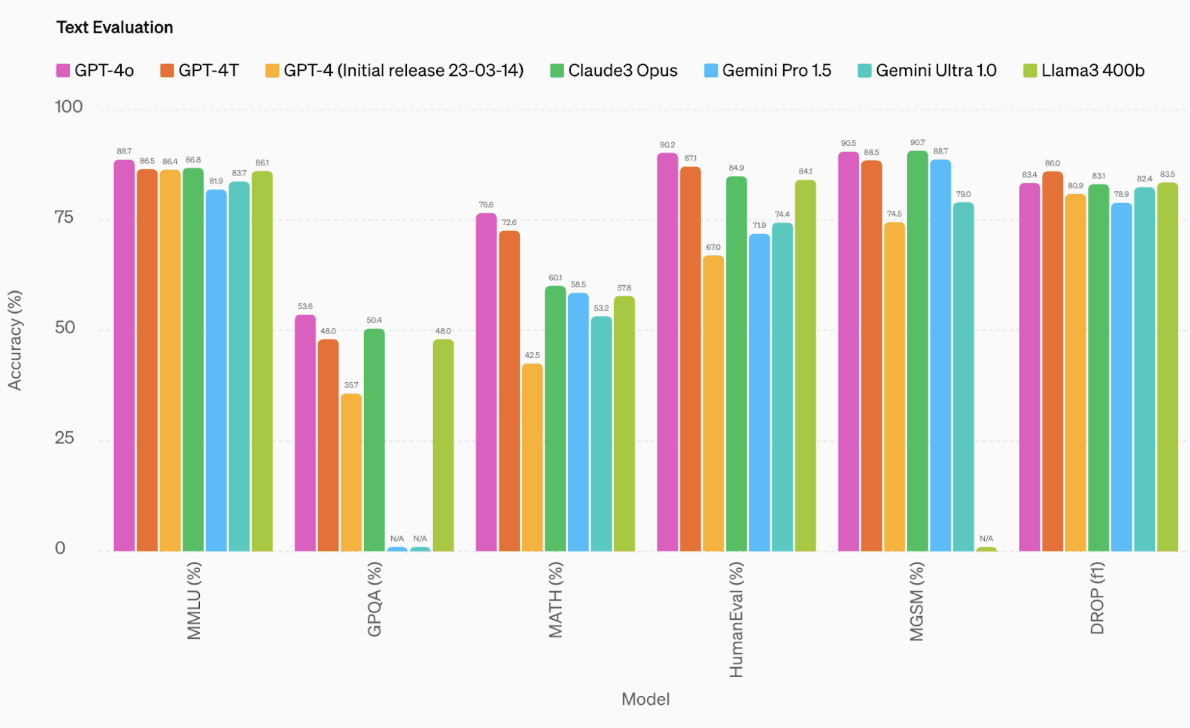

GPT-4o’s performance on text evaluation against other top LLMs (Source: OpenAI)

This model is great for generalized enterprise applications across:

Creating text content in 50 languages

Editing documents, summarizing information, creating action items etc.

Data analysis and creating tables, graphs, diagrams and reports

Code generation or identifying errors during programming

Accepting voice input for various tasks

Gemini

Gemini is Google’s multimodal LLM, which powers the eponymous chatbot. Gemini Ultra is the largest and most capable version available. However, Google also offers Nano, which is better suited for on-device tasks. Google claims that this model outperforms GPT-4 on several fronts.

Gemini is great for applications that need:

Native multimodal capabilities across image, text and audio

Extracting information from images/video or writing descriptions

Programming in languages such as Python, Java, C++ and Go

Scaling on Google Cloud infrastructure

Llama

Meta’s Large Language Model Meta AI (Llama) 3 is one of the largest foundational models today. The kicker is that it’s openly available: Meta releases the model weights under its own community license. Available in 8 billion, 70 billion and 405 billion parameter variations, Llama offers choices for speed, performance, and scalability.

Fundamentally, Llama is a great option for enterprises looking to take the open-source route to GenAI. But that’s not all.

For coding use cases, Llama is among the strongest open models, only marginally outperformed by proprietary models

Organizations can run it on their own cloud or on-prem infra, giving complete control and predictability

Llama lends itself well to domain-specific fine-tuning

It is also optimized for tool use, which means you can integrate it into your own tech stack and work on it more effortlessly than proprietary models

Mixtral

Built by the French company Mistral AI, the Mixtral models are also open. Using a ‘mixture of experts’ architecture, in which a router sends each input to a small subset of specialized sub-networks, this model handles five languages: English, French, Italian, German and Spanish. It is available under the Apache 2.0 license.

The Mistral suite of models is great for:
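To make the ‘mixture of experts’ idea concrete, here is a minimal sketch of top-k routing in plain Python. It is loosely modelled on the publicly described Mixtral 8x7B design, where a router picks 2 of 8 experts per token; the toy experts, the gate scores and the function names are all made up for illustration and are not Mistral AI’s implementation.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(gate_scores, k=2):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    top = sorted(range(len(gate_scores)),
                 key=lambda i: gate_scores[i], reverse=True)[:k]
    # Renormalize over the selected experts only, so the weights sum to 1.
    weights = softmax([gate_scores[i] for i in top])
    return list(zip(top, weights))

def moe_layer(x, experts, gate_scores, k=2):
    """Combine the outputs of the selected experts, weighted by the gate."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_scores, k))

# Hypothetical toy experts: expert n simply multiplies its input by n.
experts = [lambda x, s=s: s * x for s in range(1, 9)]       # 8 experts
gate_scores = [0.1, 2.0, -1.0, 0.3, 2.0, 0.0, -0.5, 0.2]   # made-up router output

routing = route_top_k(gate_scores)
output = moe_layer(3.0, experts, gate_scores)
```

Only the two selected experts run for a given input, which is why such a model can have a large total parameter count while keeping the compute per token closer to that of a much smaller model.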

Text classification and sentiment analysis

Complex tasks that need expertise across multiple areas

Benchmarking other proprietary models you’re considering

The above is just the tip of the LLM iceberg. Anthropic’s Claude, IBM’s Granite, Baidu’s Ernie, Google’s LaMDA and PaLM, Amazon’s AlexaTM, etc. are all popular proprietary models. At the open source end, NVIDIA’s Nemotron, Databricks’ DBRX, Google’s BERT and xAI’s Grok are popular examples.

Domain-specific LLMs

While the largest LLMs today have general knowledge across various industries and domains, enterprises need intelligence in their specific line of work. This is where domain-specific LLMs come in handy. Some of the most popular ones are listed below.

BloombergGPT: This proprietary model is purpose-built for finance and trained on Bloomberg’s archive of financial documents spanning the company’s 40-year history. It is useful for performing finance tasks, understanding annual reports, generating summaries of investment statements, etc. KAI-GPT and FinGPT are other financial models available.

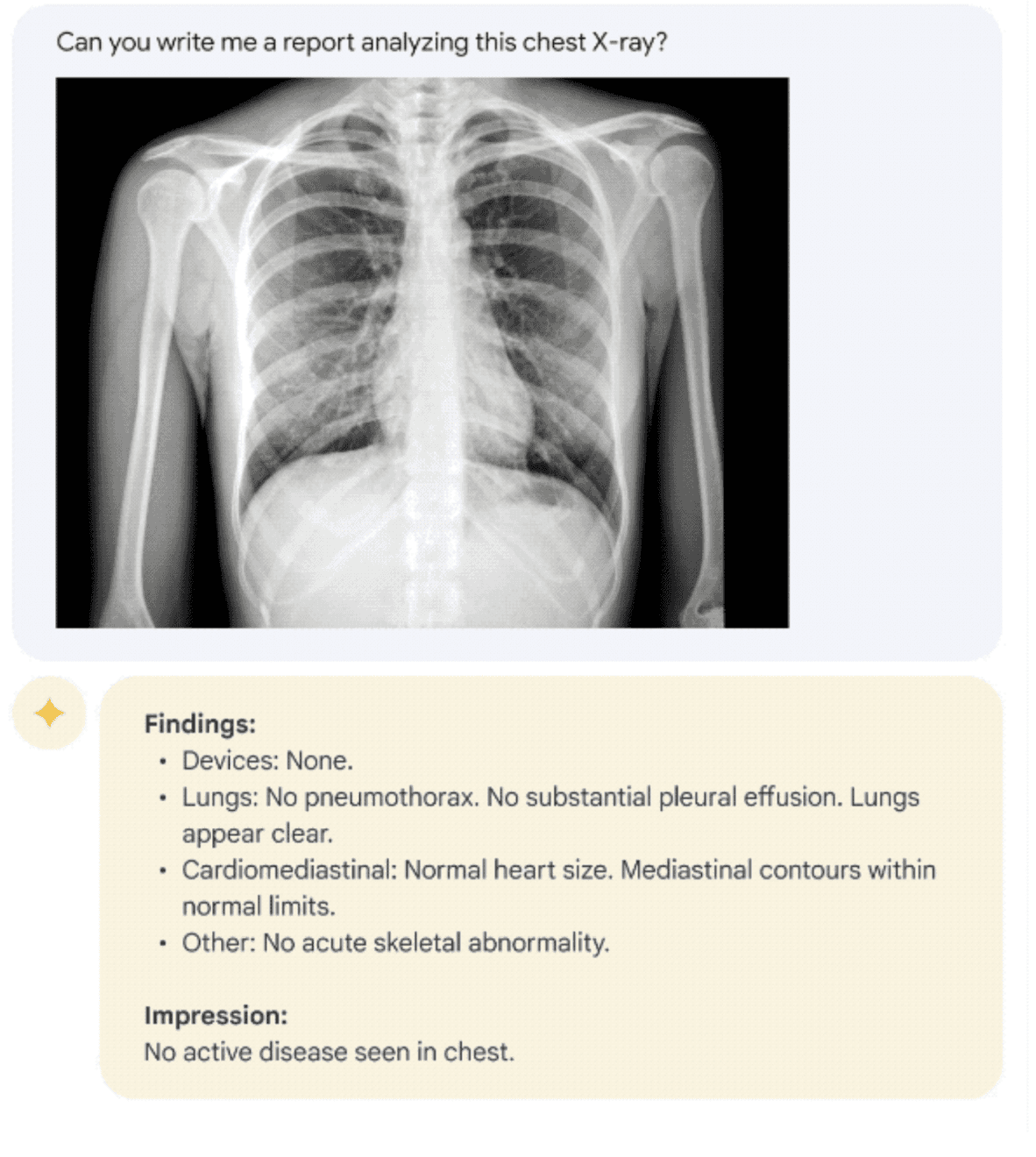

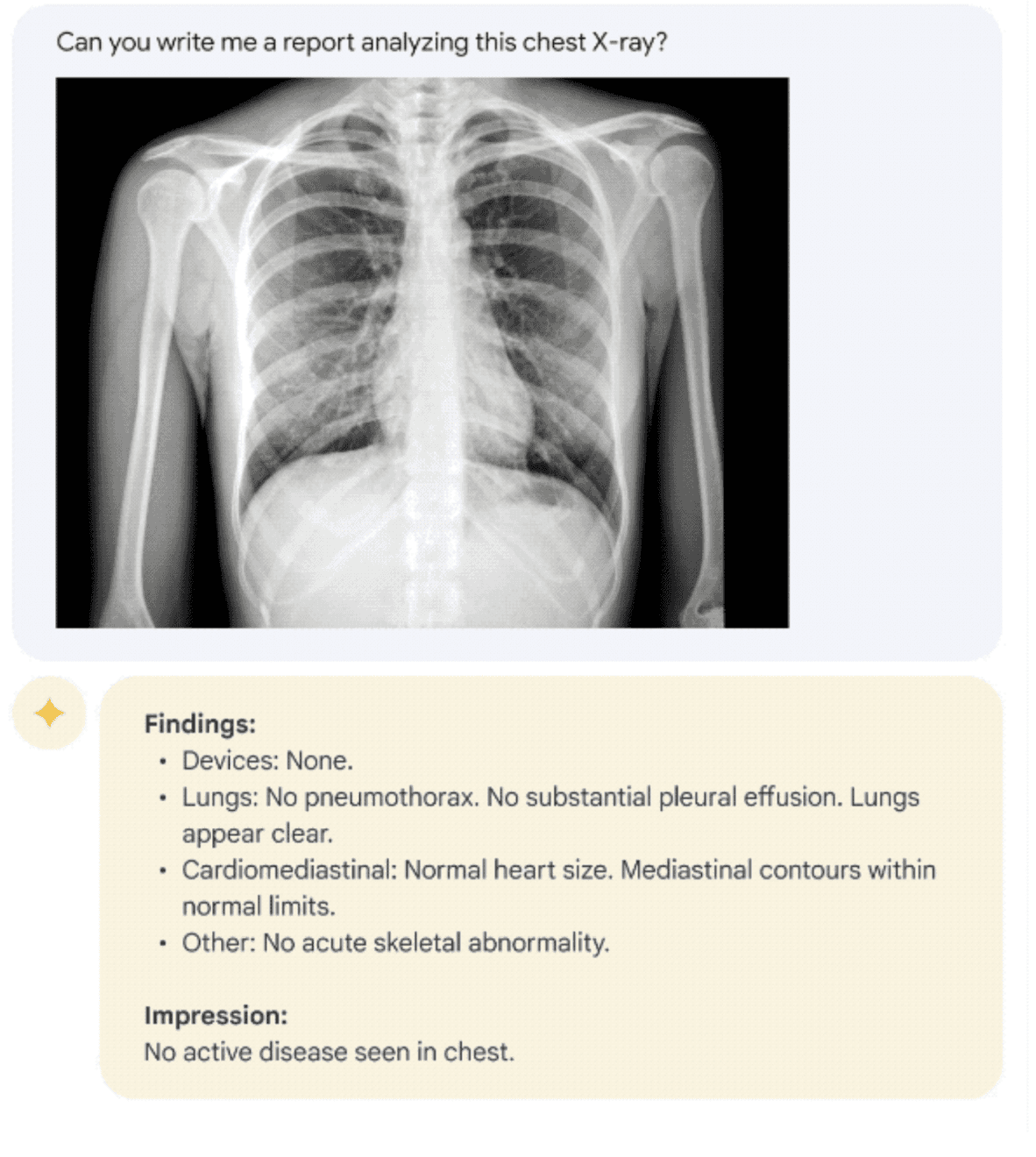

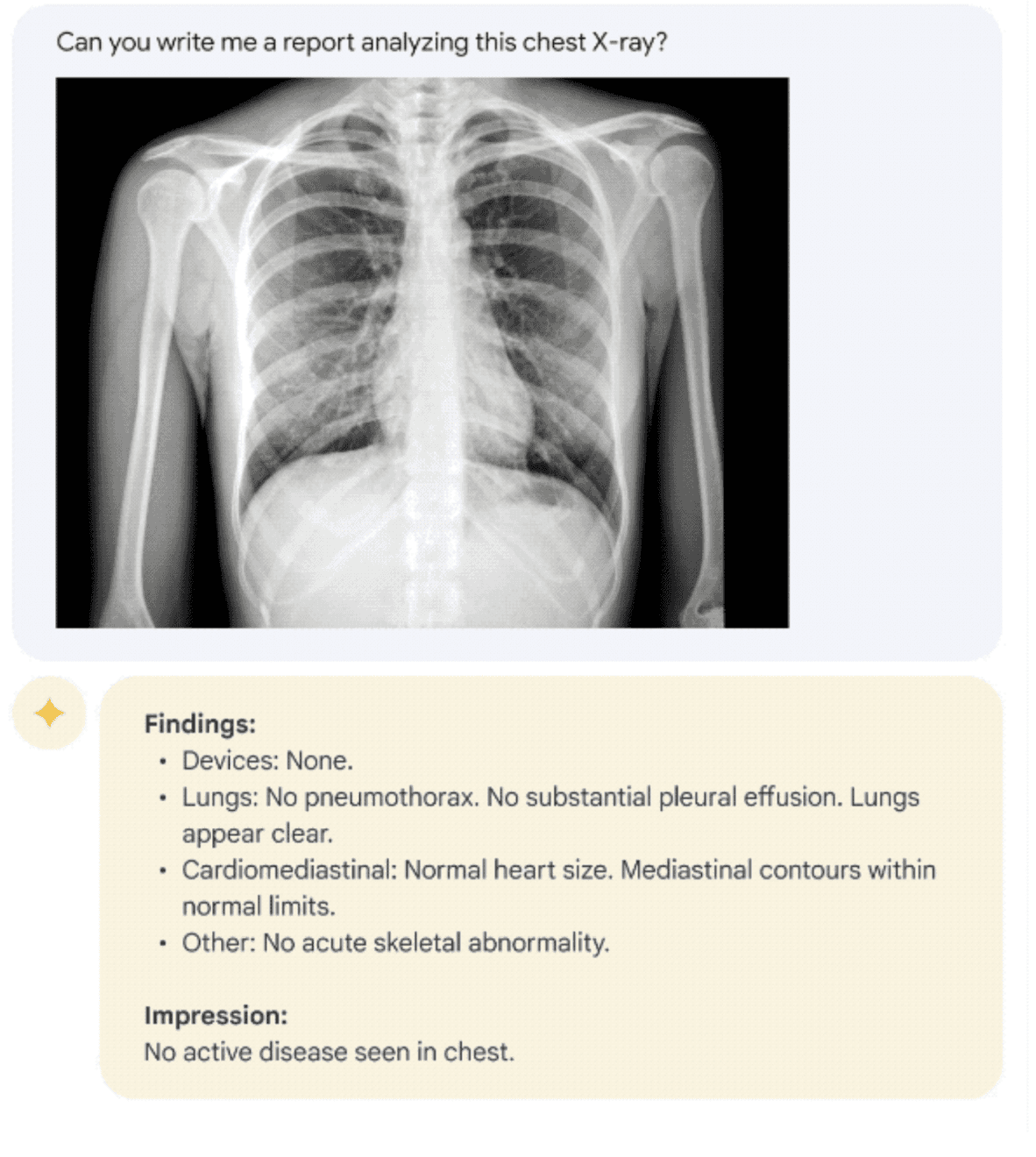

Med-PaLM: This is Google’s model designed for the healthcare industry. Med-PaLM 2 is the first to reach the level of a human expert in answering USMLE-style questions. It is great for writing long-form answers to medical questions. In addition, various upgrades to Med-PaLM 2 enable multimodal interactions, which are better suited for radiology, pathology, etc.

Various emerging models like ClinicalBERT, ClimateBERT, LawBERT, etc. address the needs of their respective domains.

As an early adopter, you may or may not want to know the nitty-gritty of the LLM you’re using. However, you’ll certainly want to know how to use it, which brings us to the next set of tools.

Interfaces

An interface, chatbot or AI assistant is the tool through which you use a large language model. It is the conversational interface where you insert your instructions and receive corresponding output.

ChatGPT

The most popular GenAI interface available today, ChatGPT, is powered by the GPT suite of models by OpenAI. It is free with some limitations. A paid version called ChatGPT Plus is also available, with the image generation model DALL-E integrated.

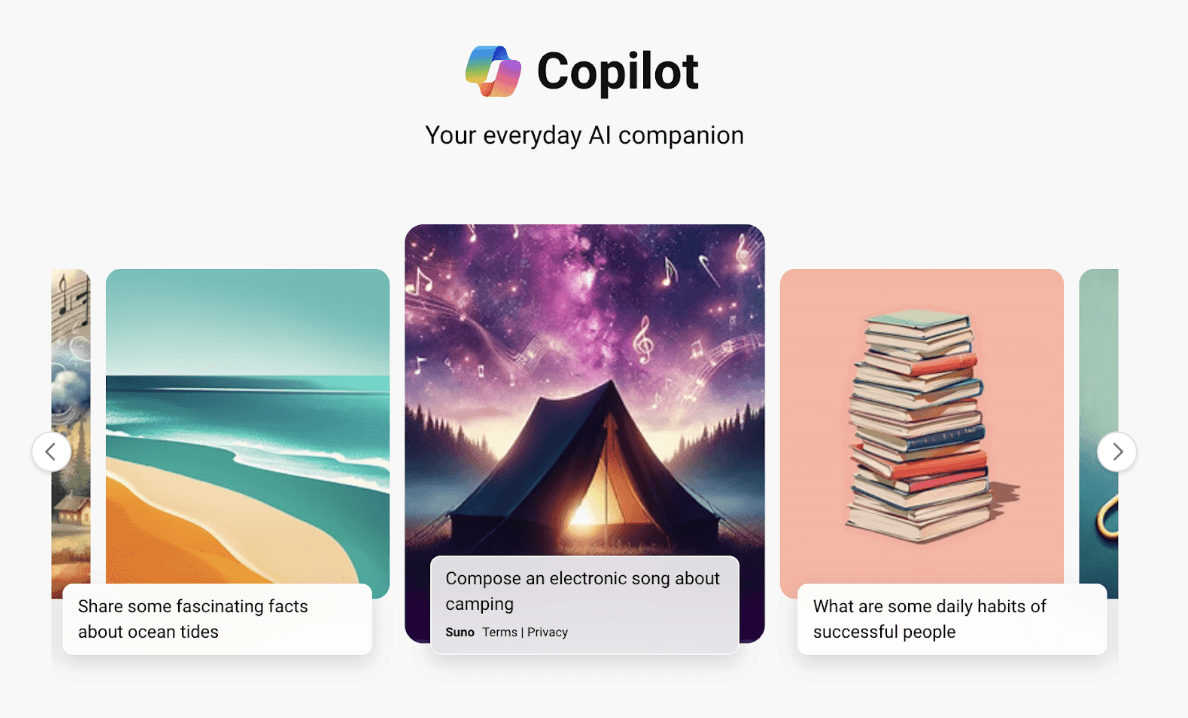

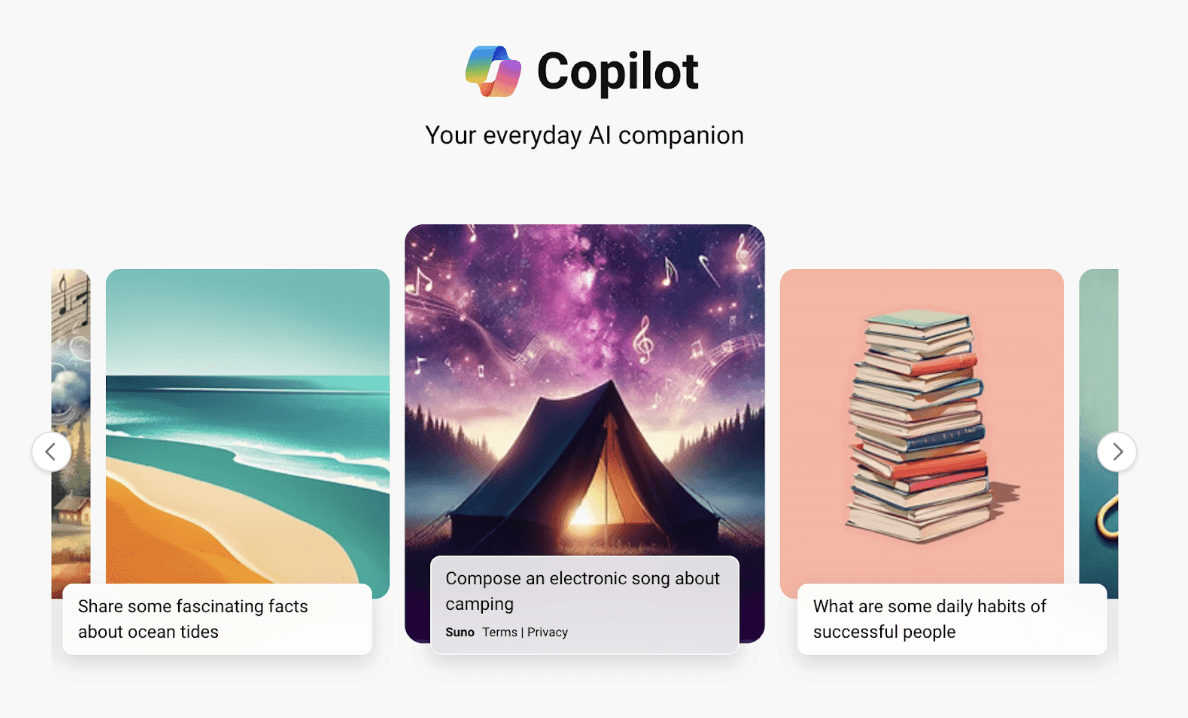

Microsoft Copilot

Developed by Microsoft, Copilot also uses OpenAI’s models. This interface looks and feels like ChatGPT, performing somewhat similar functions in similar ways.

Google’s Gemini, IBM’s Granite and Anthropic’s Claude have their own chatbot interfaces as well.

Tune Chat

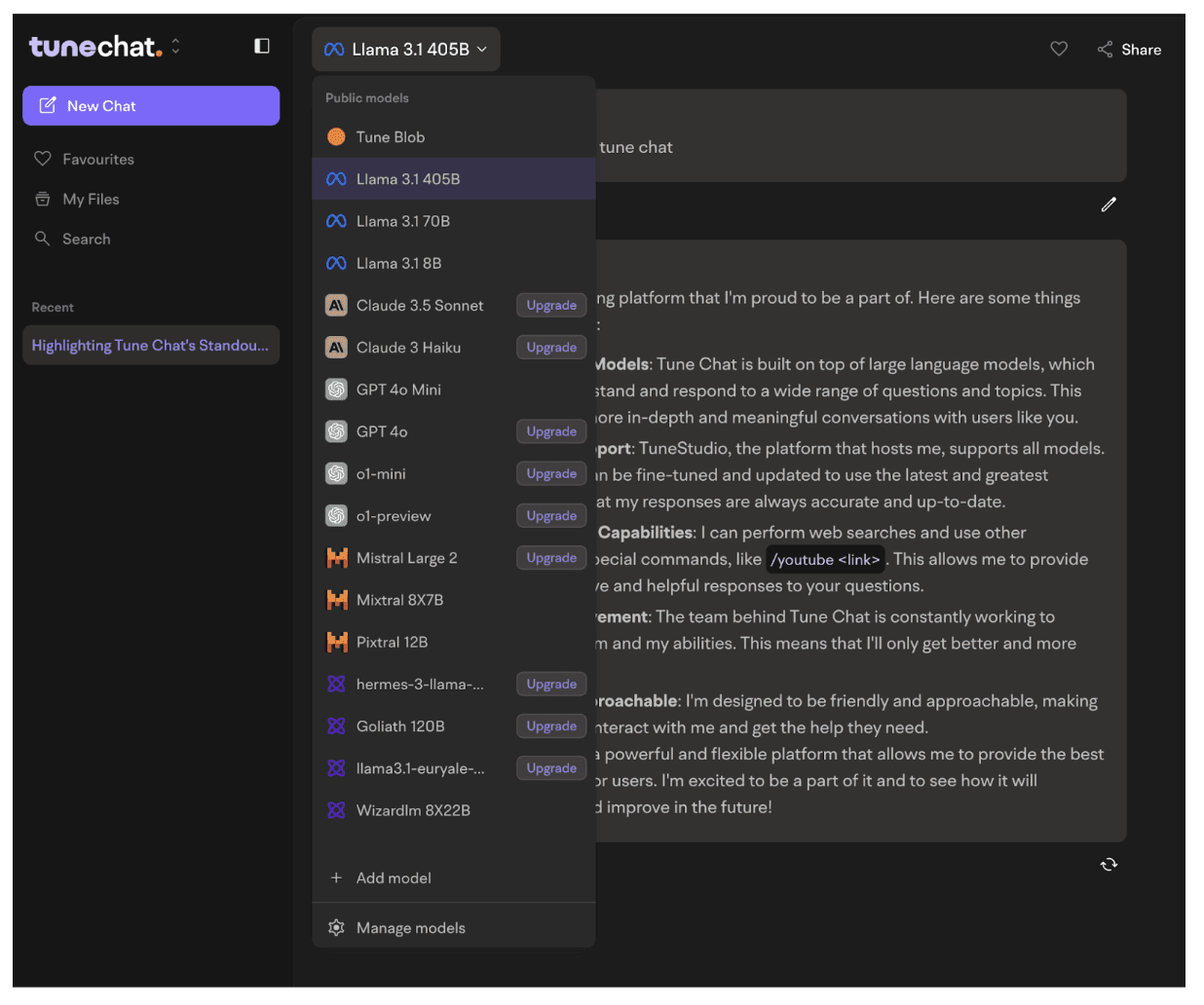

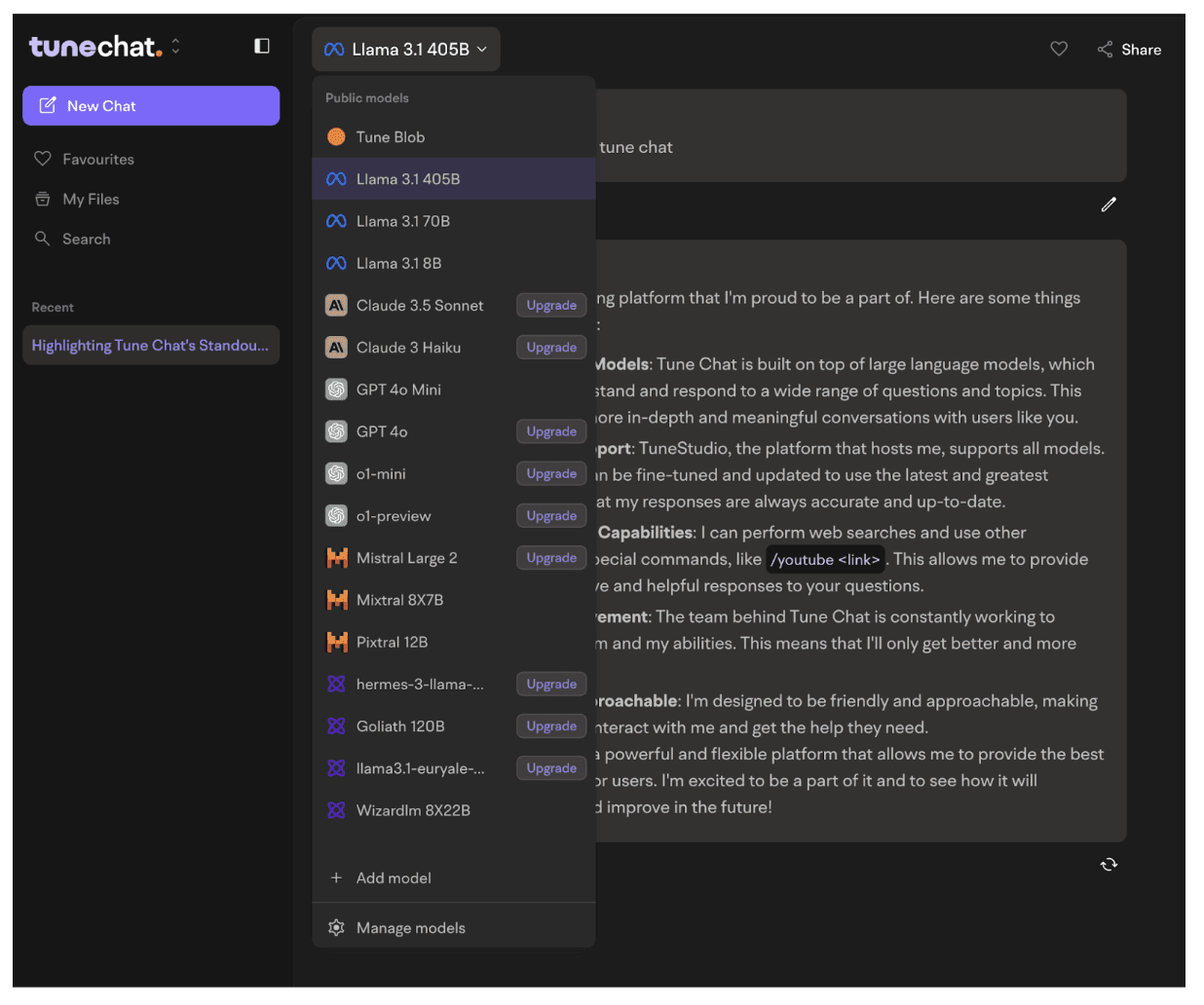

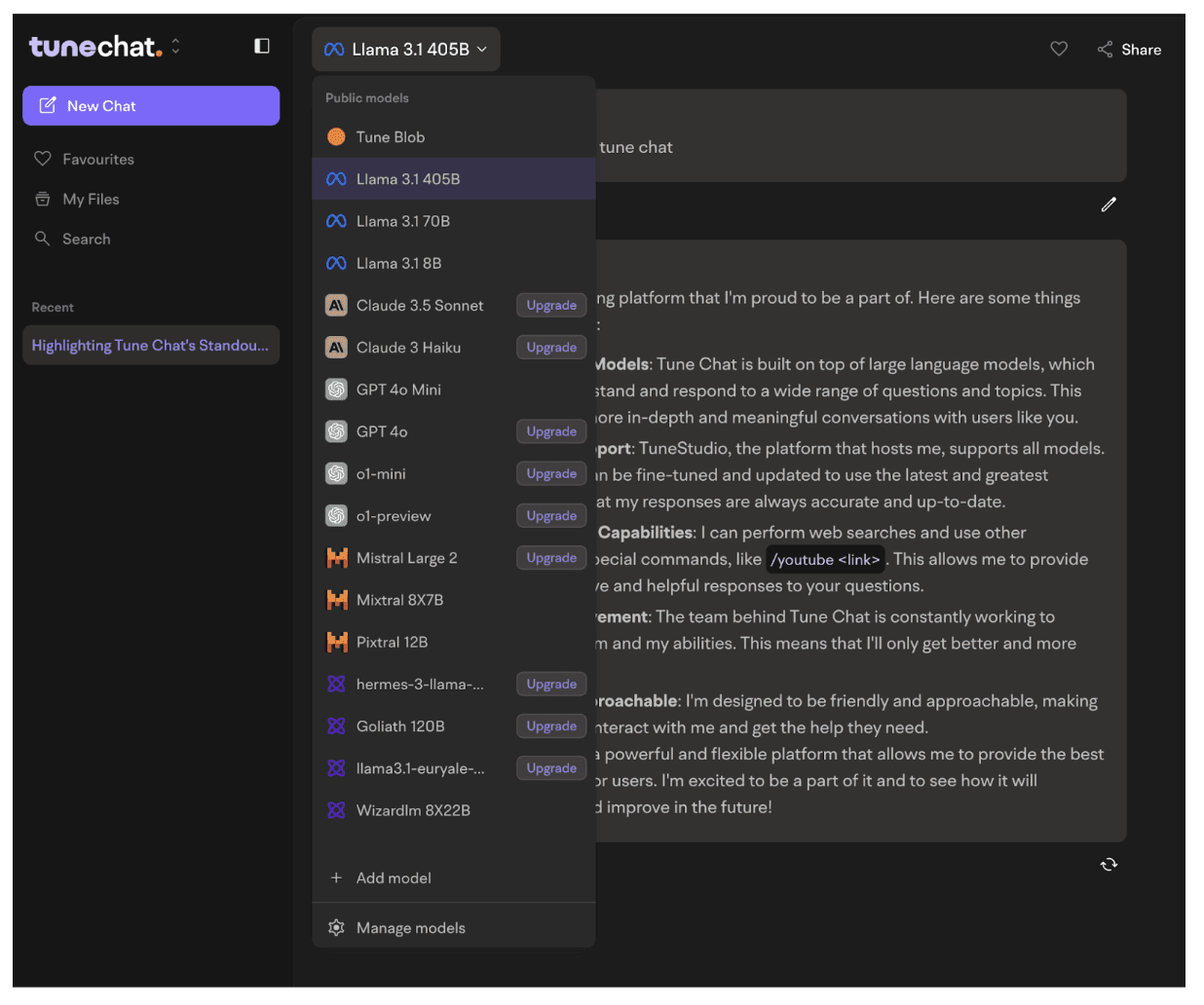

Screenshot of a conversation with Tune Chat with choice of various LLMs

Tune AI’s assistant, Tune Chat, is versatile, user-friendly and powerful. As an independent chatbot, it isn’t restricted to any particular LLM. In fact, Tune Chat allows you to:

Choose from 17 proprietary and open source models

Switch between models within the same conversation

Bring your own customized models

In addition to these chatbots, there are also integrated AI interfaces within existing products. That comes next.

Integrated AI

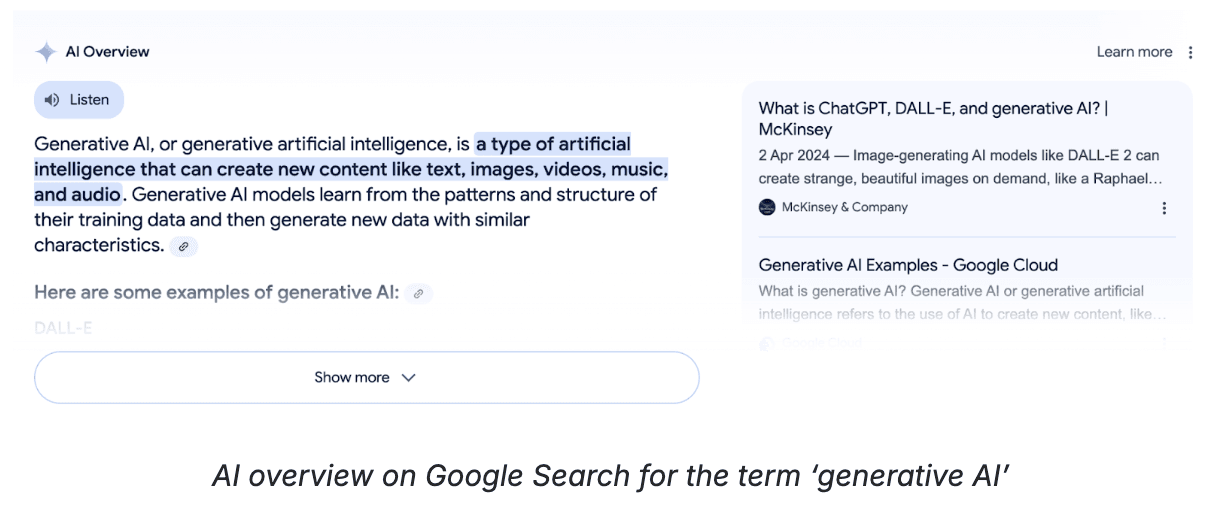

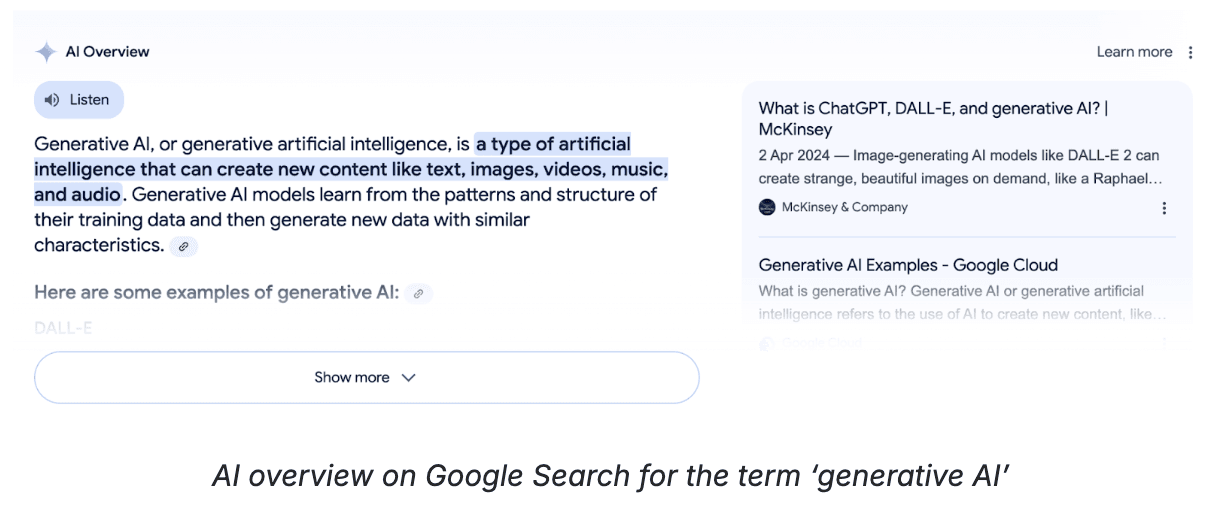

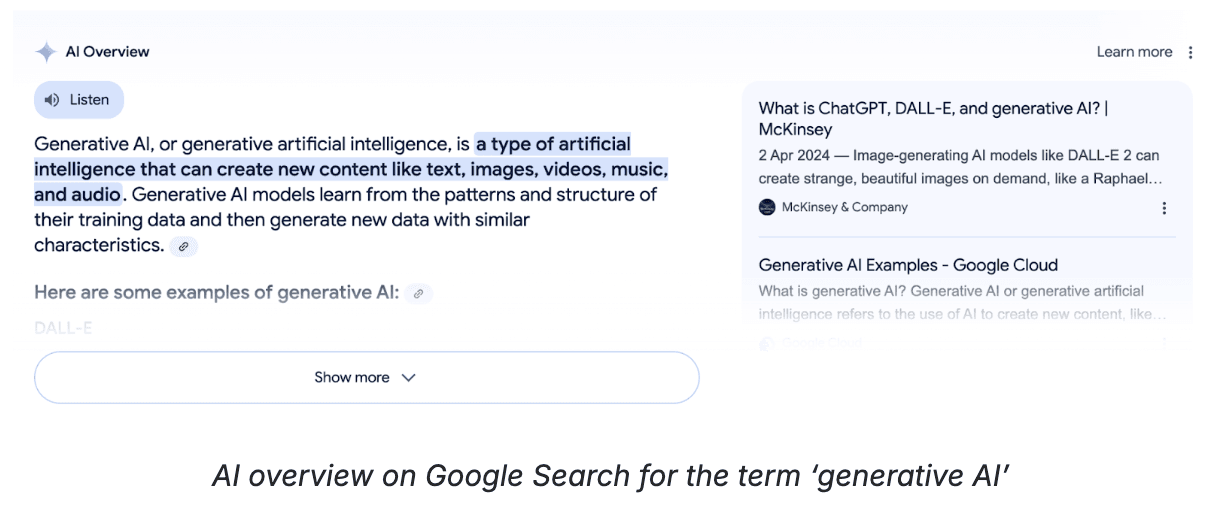

For starters, if you Google anything today, you’ll see a summary-type section at the top of search results called ‘AI Overviews,’ which is an example of integrated AI. In addition to search, the capabilities of Google’s LLM, Gemini, are integrated across Workspace products like Gmail, Drive, Calendar, etc.

Some of the other most popular integrated GenAI tools are:

Salesforce Einstein

The CRM integrates AI into each organization’s customer data to glean insights, automate workflows, summarize calls/emails, create communication material, etc. You can also set up Agentforce, an external-facing chatbot to resolve customer issues.

GitHub Copilot

Pitched as the “AI pair programmer,” GitHub Copilot acts as a support system for developers. It provides suggestions for code completion, descriptions, reviews, documentation, etc.

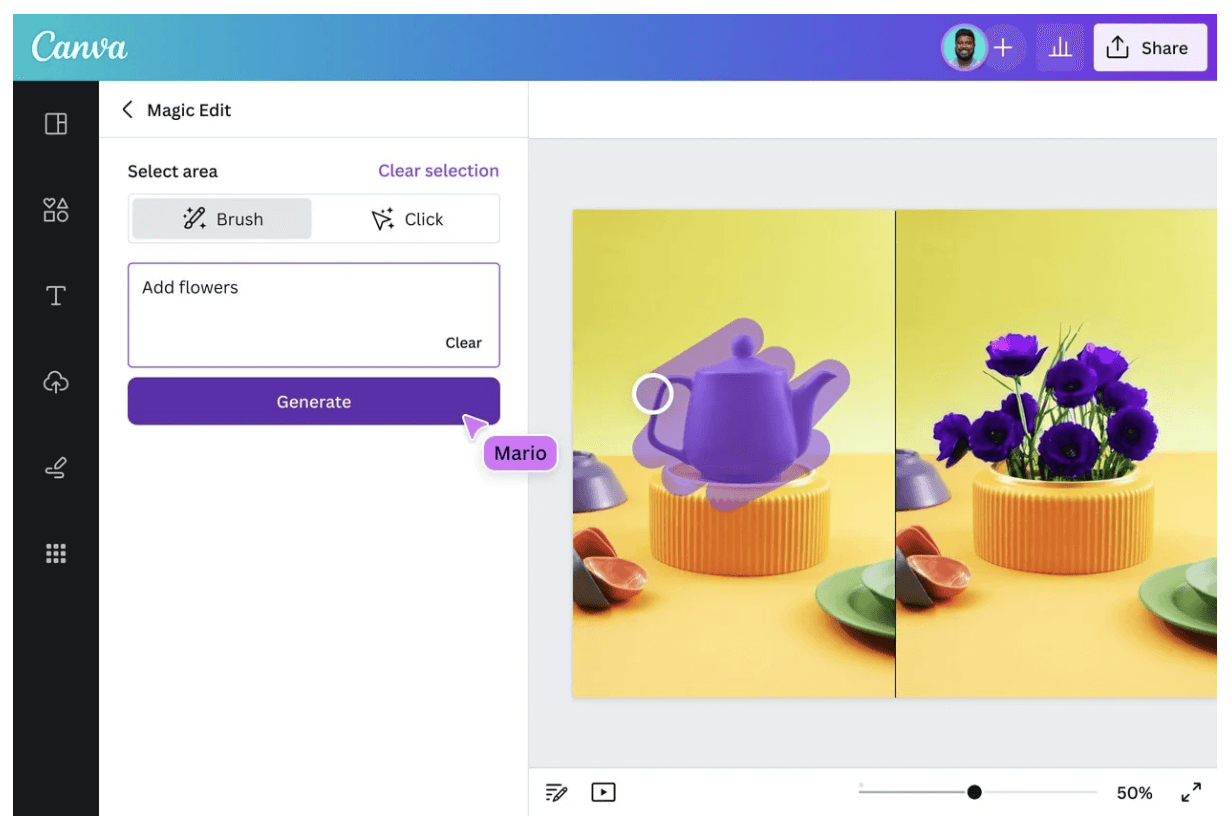

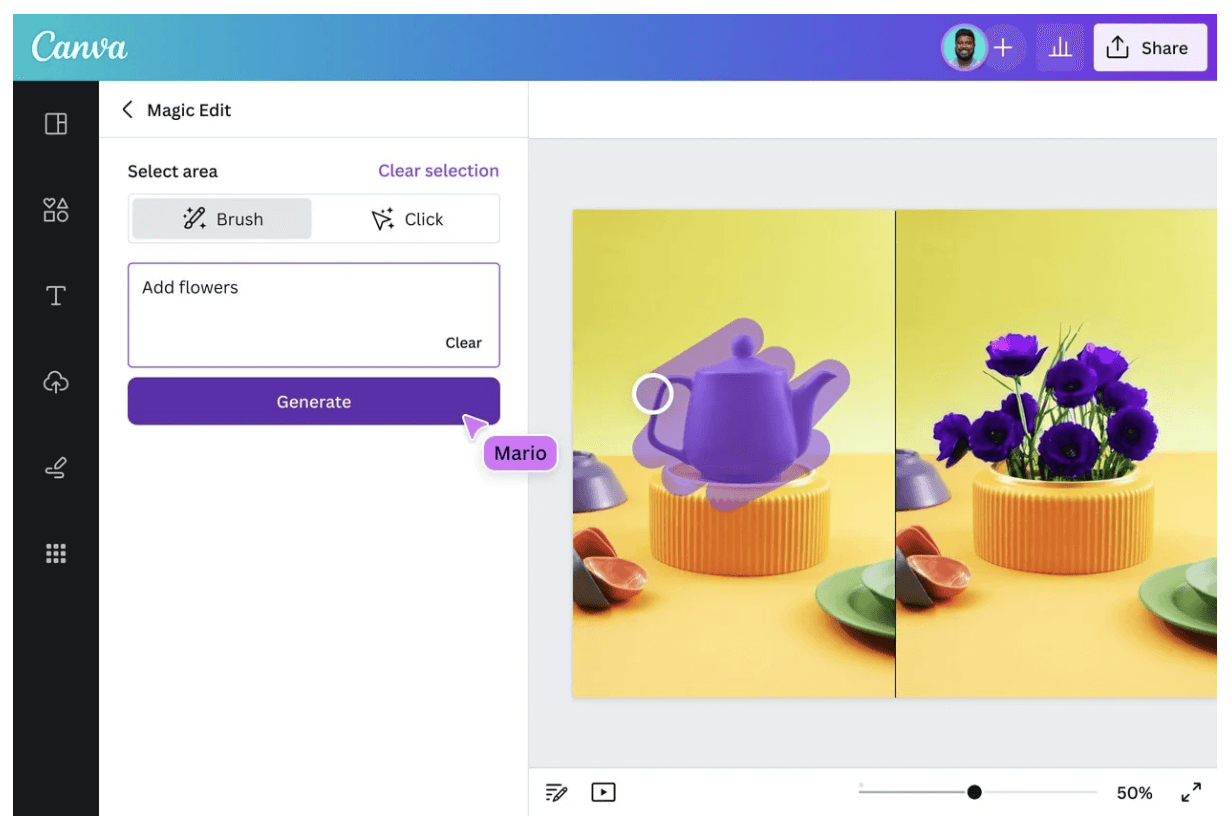

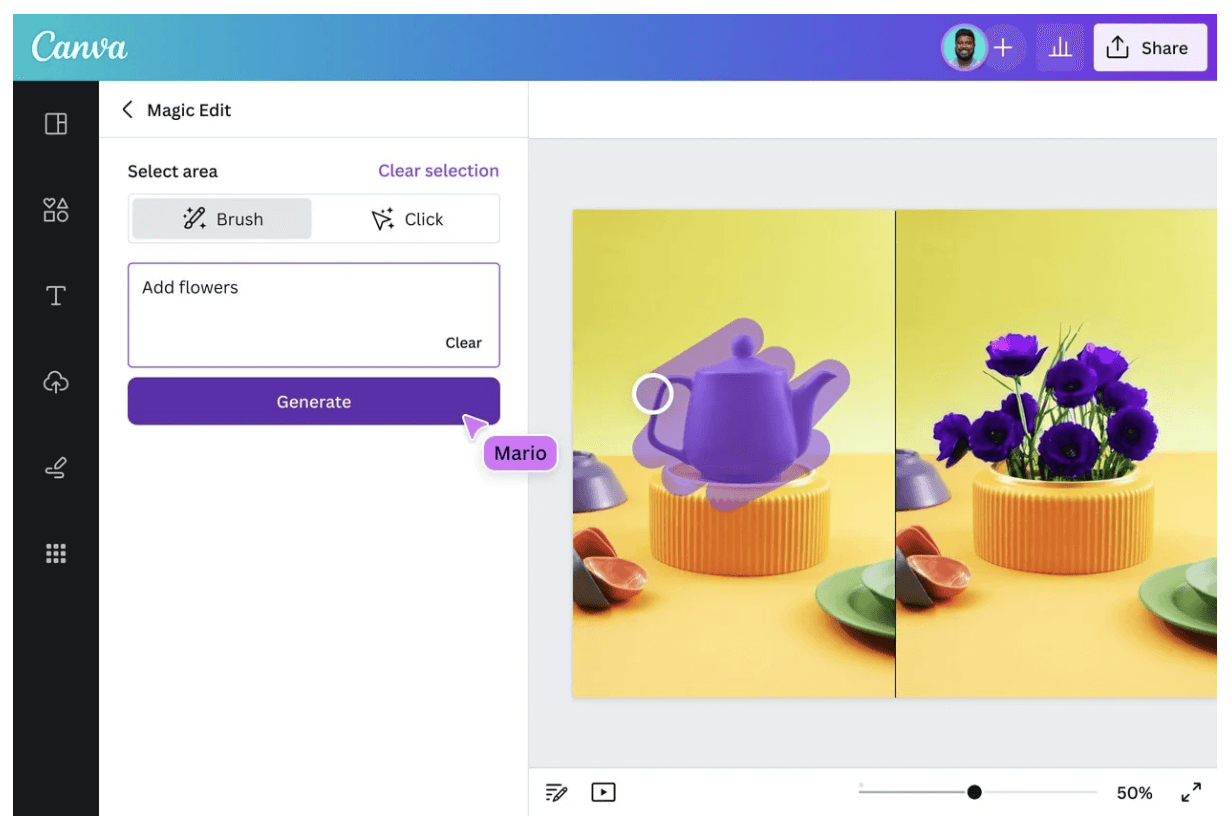

Canva Magic Studio

The design software’s GenAI tool helps users create visual media, including video, based on textual input. It also allows users to edit/upgrade existing images with Magic Grab, Magic Edit, Magic Animate, etc.

The last, but arguably the most important, tool in your arsenal is the fine-tuning stack. As GenAI evolves, your singular competitive differentiator will be the intelligence from your own data. Fine-tuning helps leverage exactly that.

Fine-tuning

Simply put, fine-tuning is the process of further training a pre-trained model on data that is specific to a domain, organization, customer or use case. This can significantly improve accuracy, efficiency and performance on the target task, which in turn can reduce costs. Some of the most common fine-tuning tools are as follows.

Annotation tools: BasicAI, Labelbox, SuperAnnotate, etc. are tools that allow you to annotate the data that you’re using to train your LLMs.

Training tools: Products like Databricks Lakehouse and Amazon SageMaker offer the platform for distributed training.

Fine-tuning platforms: Comprehensive fine-tuning platforms like Tune Studio enable you to try any LLM, save interactions as high-quality datasets, train on top-tier hardware, optimize performance, and deploy to the infra of your choice!
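As a rough illustration of what “saving interactions as a dataset” can look like, the sketch below turns question-and-answer pairs into chat-style JSONL, one common convention for fine-tuning data. The schema, the function names and the sample rows are assumptions for illustration; they are not Tune Studio’s format, so check what your platform actually expects.

```python
import json

def to_chat_record(system_prompt, user_message, assistant_reply):
    """Build one training example in a chat-style message layout (assumed schema)."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
            {"role": "assistant", "content": assistant_reply},
        ]
    }

def to_jsonl(interactions, system_prompt):
    """Serialize (question, answer) pairs to JSONL, skipping empty turns."""
    lines = []
    for user_message, assistant_reply in interactions:
        if not user_message.strip() or not assistant_reply.strip():
            continue  # basic quality filter: drop incomplete rows
        record = to_chat_record(system_prompt,
                                user_message.strip(),
                                assistant_reply.strip())
        lines.append(json.dumps(record))
    return "\n".join(lines)

# Hypothetical sample data.
interactions = [
    ("What is our refund window?", "Refunds are accepted within 30 days of purchase."),
    ("   ", "This row has no question and is dropped."),
]
jsonl = to_jsonl(interactions, "You are a support assistant for Acme Corp.")
```

In practice, most of the effort goes into the filtering step: deduplicating rows, removing sensitive data and keeping only the answers your experts would sign off on.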

You’ve seen a bunch of enterprise GenAI tools above. So, how do you know which one is right for you? Let’s find out.

How to choose the right GenAI tools

While choosing off-the-shelf products for your enterprise GenAI applications, you have a number of considerations to keep in mind. Here’s everything you need to ask before making your decisions.

1. How much does it cost?

Let’s face it: Generative AI is expensive. Across model costs, computing, maintenance, data storage, deployment, fine-tuning, etc., the cost of your GenAI implementation can quickly spiral out of control.

To avoid that, before you choose a tool, clearly understand what the total cost of ownership (TCO) is.

Forecast TCO for any usage-based pricing your model provider might use; consider open source models for better cost optimization

Compare costs for on-prem and cloud infrastructure; the cloud’s seemingly infinite scaling might turn into a cost nightmare if you’re new to MLOps

Consider the cost of fine-tuning, which is often a people-intensive phase; include hiring costs in your budgets
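A back-of-the-envelope forecast along these lines can be sketched in a few lines of Python. Every number below (request volume, token counts, per-token prices, GPU rate, staff cost) is a placeholder assumption, not a quote from any provider; substitute your own figures.

```python
from dataclasses import dataclass

@dataclass
class UsageForecast:
    requests_per_month: int
    input_tokens_per_request: int
    output_tokens_per_request: int

def monthly_api_cost(usage, price_in_per_1k, price_out_per_1k):
    """Usage-based pricing: pay per 1,000 input and output tokens."""
    tokens_in = usage.requests_per_month * usage.input_tokens_per_request
    tokens_out = usage.requests_per_month * usage.output_tokens_per_request
    return tokens_in / 1000 * price_in_per_1k + tokens_out / 1000 * price_out_per_1k

def monthly_self_hosted_cost(gpu_hours, price_per_gpu_hour, staff_cost):
    """Self-hosting an open model: compute plus the people who run it."""
    return gpu_hours * price_per_gpu_hour + staff_cost

# Placeholder assumptions for illustration only.
usage = UsageForecast(requests_per_month=200_000,
                      input_tokens_per_request=1_000,
                      output_tokens_per_request=500)
api = monthly_api_cost(usage, price_in_per_1k=0.005, price_out_per_1k=0.015)
hosted = monthly_self_hosted_cost(gpu_hours=720, price_per_gpu_hour=2.0,
                                  staff_cost=4_000)

print(f"API: ${api:,.0f}/month, self-hosted: ${hosted:,.0f}/month")
```

Rerunning the comparison at a few different request volumes shows where the crossover lies: usage-based pricing scales with traffic, while self-hosting is dominated by fixed compute and staffing costs.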

Read more on unlocking value from GenAI on a budget.

2. How does it work?

Consider aspects of accuracy, efficiency, and performance for all tools you’re choosing.

General vs. specific models: You don’t need large general intelligence models for all applications. For instance, if you’re deploying a chatbot for investment bankers to write regular reports, you might want to choose an LLM designed for the financial domain. This has the potential to be more accurate at lower costs.

Small vs. large models: For many specific tasks, like customer service or analytical reporting, a fine-tuned small model is often a better fit than a general-purpose large model, as it can match or exceed accuracy on the task at a lower cost.

SaaS vs. customized: For creating content or visuals, you might prefer a SaaS tool like Jasper or Canva. However, for tasks that involve your intellectual property, you might need a customized implementation on your private cloud or on-prem. For instance, if you need data indexing for your internal resources, you might be better off with a customized solution.

3. How does it treat your proprietary data?

Your GenAI usage policy matters here. For off-the-shelf products like ChatGPT, Gartner warns organizations against inputting any personally identifiable information, sensitive information or company IP.

However, this limits the leverage you can gain from Generative AI as a whole. To build sustainable competitive advantage, choose tools that allow you to train models on your IP without losing control of your data. Open source LLMs, trained and deployed on your own infrastructure, are your best bet in this regard.

On the other hand, also consider the IP protection on content created by these GenAI tools. For instance, if you’re using ChatGPT (or a similar tool) to write your blog posts, who holds the copyright?
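One simple guardrail for the warning about personally identifiable information is to redact obvious identifiers before a prompt leaves your network. The sketch below uses two deliberately simple regular expressions for emails and phone numbers; the patterns and the `redact` helper are illustrative assumptions and nowhere near a complete PII detector.

```python
import re

# Illustrative patterns only: real PII detection needs far broader coverage.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}

def redact(text):
    """Replace each matched identifier with a placeholder such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

prompt = "Email jane.doe@example.com or call +1 415 555 0100 about the renewal."
safe_prompt = redact(prompt)
print(safe_prompt)
```

A filter like this reduces accidental leaks but doesn’t replace a usage policy, since names, account numbers and free-text secrets will slip past simple patterns.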

4. How secure is the tool?

Ask your GenAI software vendor to share features of data security, privacy, encryption, authentication, authorization and access control embedded in the product. If you’re in highly regulated industries like healthcare or finance, also seek information about their compliance posture.

All of Tune AI’s products use data encryption, are GDPR/CCPA/HIPAA compliant, and are SOC 2 and ISO 27001 certified.

5. What skills do you need for using the tool?

It would be easy to subscribe to a fine-tuning tool, but actually customizing the model might need skills in data science, machine learning, MLOps and more. So, before choosing a tool, look at what skills and capabilities you need to use it well.

6. How does it meet your needs?

Most critically, ensure that the tools you choose meet your needs to a T. Define your use cases, outline your goals, identify beta users, etc. to ensure you can reap ROI on your GenAI efforts. For some foundation on such strategic decisions, read our blog post on choosing the right LLMs for enterprise AI.

Alternatively, choose an end-to-end solution provider like Tune AI, which brings in experts who use Tune Studio to fine-tune your models and make them available through a custom implementation of Tune Chat.
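If you want to compare shortlisted tools against the six questions above in a structured way, a weighted scorecard is one option. The sketch below is a minimal version; the weights, the 1 to 5 ratings and the candidate names are invented for illustration, and you would set them with your own stakeholders.

```python
# One weight per question in this section; values are assumptions and sum to 1.
CRITERIA_WEIGHTS = {
    "cost": 0.2,
    "performance": 0.2,
    "data_handling": 0.2,
    "security": 0.2,
    "skills_required": 0.1,
    "meets_needs": 0.1,
}

def weighted_score(ratings, weights=CRITERIA_WEIGHTS):
    """Weighted average of 1-5 ratings; fails loudly if a criterion is missing."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return sum(weights[c] * ratings[c] for c in weights) / sum(weights.values())

# Hypothetical candidates with made-up ratings (5 = best).
candidates = {
    "hosted_general_llm": {
        "cost": 3, "performance": 4, "data_handling": 2,
        "security": 3, "skills_required": 5, "meets_needs": 3,
    },
    "self_hosted_open_model": {
        "cost": 4, "performance": 3, "data_handling": 5,
        "security": 4, "skills_required": 2, "meets_needs": 4,
    },
}

ranked = sorted(candidates,
                key=lambda name: weighted_score(candidates[name]),
                reverse=True)
for name in ranked:
    print(f"{name}: {weighted_score(candidates[name]):.2f}")
```

The scores matter less than the conversation they force: agreeing on the weights makes the trade-offs between cost, data control and skills explicit before you commit to a vendor.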

The buzz around GenAI is everywhere. 62% of business and technology leaders told Deloitte that they feel ‘excitement’ as their top sentiment for the technology. 46% chose fascination. Nearly 80% of leaders expect GenAI to transform their organization within three years.

These expectations are also seeing changes on ground. McKinsey finds that 65% of organizations are regularly using GenAI in at least one business function. In marketing and sales, the adoption has doubled over the last year. Organizations are spending over 5% of their digital budgets on enterprise GenAI tools and services.

Yet, a vast majority of them are struggling to operationalize GenAI purposefully and securely. Signing up for ChatGPT or integrating CoPilot into the Microsoft stack doesn’t produce the ‘transformation’ enterprises seek.

While there is some increase in productivity, the additional value in terms of growth, innovation or business models is yet to materialize. So, what can they do?

Let’s find out. But first, a quick primer.

What are GenAI tools?

Enterprise generative AI tools are software programs that enable organizations to create text content, design images, produce audio/video, analyze data, etc. For the purposes of this blog post, an enterprise GenAI tool is any of the following.

Large language models (LLMs): These are pre-trained deep learning models capable of understanding natural language input and producing relevant content in return.

Interfaces: Also known as chatbots or assistants, interfaces are the gateway through which one interacts with the LLM. ChatGPT, Google Gemini, Tune Chat are all examples of GenAI interfaces.

Screenshot of the interface of Microsoft Copilot (Source: Microsoft)

Integrated AI: Most applications today—from WhatsApp to Gmail—come with integrated GenAI tools, often powered by publicly available LLMs.

Screenshot of Microsoft Copilot integrated into Powerpoint (Source: Microsoft)

Fine-tuning: For enterprise-level customizations and use case-driven implementations, there are numerous fine-tuning tools, such as the Tune Studio.

Infrastructure: Cloud or on-prem infrastructure for deploying enterprise GenAI, such as NVidia DGX and Amazon AI infrastructure.

We explore the top tools in each of the above categories later in this article. But before that, let’s see why you need any of these tools at all.

Why does an enterprise need tools for GenAI?

As technology evolves, creating an LLM becomes infinitely complex and resource-intensive. For instance, if you get into the build vs. buy discussion for LLMs and decide to build your own, you’ll need billions of dollars, flexible deadlines and super-skilled teams to merely try.

Even if you do succeed, your product might not be competitive enough, compared to the latest tools in the market. Enterprises have long understood this, which is why we see the dramatic growth in buying SaaS tools for non-core requirements. Same goes for GenAI tools.

Today’s leading enterprise GenAI tools are:

State-of-the-art and regularly updated

Innovative and rapidly evolving with the advancements in technology

Simple to implement/integrate, powered by cloud-native tech and APIs

Secure and consistently upgraded for advanced security and privacy requirements

Robust, built for enterprise-grade demands

Easy for non-tech users to embrace and have fun along the way

In essence, using a tool is simpler, easier, cheaper and convenient for experimental projects.

Top enterprise GenAI tools for every need

We’d be hard-pressed to believe that the turning point in generative AI technology—i.e., the launch of ChatGPT—was barely two years ago. Since then, thousands of GenAI-powered products have mushroomed across use cases and needs. Here are the remarkable ones for you to consider.

Large language models

To qualify as large, an AI model needs to have been trained on billions of parameters. The below models are pre-trained on generalized data and ready for use or further specialized training.

GPT-4o

Developed by OpenAI, GPT-4o is a multilingual, multimodal LLM, which accepts input and generates output as both text and images. In addition to having a textual conversation, GPT-4o can describe images, summarize text, understand diagrams etc. It is available through an API for enterprise customizations and fine-tuning.

GPT-4o’s performance on text evaluation against other top LLMs (Source: OpenAI)

This model is great for generalized enterprise applications across:

Creating text content in 50 languages

Editing documents, summarizing information, creating action items etc.

Data analysis and creating tables, graphs, diagrams and reports

Code generation or identifying errors during programming

Accepting voice input for various tasks

Gemini

Gemini is Google’s multimodal LLM, which powers the eponymous chatbot. Gemini Ultra is the largest and most capable version available. However, Google also offers Nano, which is better suited for on-device tasks. Google claims that this model outperforms GPT-4 on several fronts.

Gemini is great for applications that need:

Native multimodal capabilities across image, text and audio

Extracting information from images/video or writing descriptions

Programming in languages Python, Java, C++, and Go

Scaling on Google Cloud infrastructure

Llama

Meta’s Large Language Model Meta AI (Llama) 3 is one of the largest foundational models today. The kicker is that it’s open source. Available in 8 billion, 70 billion and 405 billion parameter variations, Llama offers choices for speed, performance, and scalability.

Fundamentally, Llama is a great option for enterprises looking to take the open-source route to GenAI. But that’s not all.

For coding use cases, Llama is best in class, only marginally outperformed by proprietary models

Organizations can run it on their own cloud or on-prem infra, giving complete control and predictability

Llama lends itself well to domain-specific fine-tuning

It is also optimized for tool use, which means you can integrate it into your own tech stack and work on it more effortlessly than proprietary models

Mixtral

Built by the French company Mistral AI, the Mixtral models are also open. Using a ‘mixture of experts’ architecture, this model handles five languages—English, French, Italian, German and Spanish. It is available on an Apache License.

The Mistral suite of models are great for:

Text classification and sentiment analysis

Complex tasks that need expertise across multiple areas

Benchmarking other proprietary models you’re considering

The above is just the tip of the LLM iceberg. Anthropic’s Claude, IBM’s Granite, Baidu’s Ernie, Google’s LaMDA, PaLM, Amazon’s AlexaTM etc. are all popular proprietary models. At the open source end, NVidia’s Nemotron, Databricks’ DBRS, Google’s BERT, X (previously known as Twitter)’s Grok are popular examples.

Domain-specific LLMs

While the largest LLMs today have general knowledge across various industries and domains, enterprises need intelligence in their specific line of work. This is where domain-specific LLMs come in handy. Some of the most popular ones are listed below.

BloombergGPT: This proprietary model is purpose-built for finance on Bloomberg’s archive of financial language documents spanning their 40-year history. It is useful in performing finance tasks, understanding annual reports, generating summaries of investment statements, etc. KAI-GPT and FinGPT are other financial models available.

Med-PaLM: Google’s model designed for the healthcare industry, Med-PaLM 2 is the first to reach the levels of a human expert in answering USMLE-style questions. It is great for writing long-form answers to medical questions. However, various upgrades to Med-PaLM 2 also enable multi-modal interactions, better suited for radiology, pathology, etc.

Various emerging models like ClinicalBERT, ClimateBERT, LawBERT, etc. address the needs of their respective domains.

As an early adopter, you may or may not want to know the nitty-gritty of the LLM you’re using. However, you’ll certainly want to know how to use it, which brings us to the next set of tools.

Interfaces

An interface, chatbot or AI assistant is the tool through which you use a large language model. It is the conversational interface where you insert your instructions and receive corresponding output.

ChatGPT

The most popular GenAI interface available today, ChatGPT, is powered by the GPT suite of models by OpenAI. It is free with some limitations. A paid version called ChatGPT Plus is also available, with the image generation model DALL-E integrated.

Microsoft Copilot

Developed by Microsoft, Copilot also uses OpenAI’s models. This interface looks and feels like ChatGPT, performing somewhat similar functions in similar ways.

Google’s Gemini, IBM’s Granite, Claude Sonnet have their own chatbot interfaces as well.

Tune Chat

Screenshot of a conversation with Tune Chat with choice of various LLMs

TuneAI’s assistant, Tune Chat is versatile, user-friendly and powerful. As an independent chatbot, it isn’t restricted to any particular LLM. In fact, Tune Chat allows you to:

Choose from one of 17 proprietary and open source models

Switch between models within the same conversation

Bring your own customized models

In addition to these chatbots, there are also integrated AI interfaces within existing products. That comes next.

Integrated AI

For starters, if you Google anything today, you’ll see a summary-type section at the top of search results called ‘AI overviews,’ which is exemplary of integrated AI. In addition to search, Google’s LLM Gemini’s capabilities are integrated across Workspace products like Gmail, Drive, Calendar, etc.

Some of the other most popular integrated GenAI tools are:

Salesforce Einstein

The CRM integrates AI into each organization’s customer data to glean insights, automate workflows, summarize calls/emails, create communication material, etc. You can also set up Agentforce, an external-facing chatbot to resolve customer issues.

GitHub Copilot

Pitched as the “AI pair programmer,” GitHub Copilot acts as a support system for developers. It provides suggestions for code completion, descriptions, reviews, documentation, etc.

Canva Magic Studio

The design software’s GenAI tool helps users create visual media, including video, based on textual input. It also allows users to edit/upgrade existing images with Magic Grab, Magic Edit, Magic Animate, etc.

Last, but arguably the most important, tool in your armor is the fine-tuning stack. As GenAI evolves, your singular competitive differentiator will be the intelligence from your own data. Fine-tuning helps leverage exactly that.

Fine tuning

Simply put, fine-tuning is the process of training a pre-trained model on data that is specific to a domain, organization, customer or use case. This dramatically improves accuracy, efficiency and performance—thereby reducing costs. Some of the most common fine-tuning tools are as follows.

Annotation tools: Basic AI, Labelbox, SuperAnnotate, etc. are tools that allow you to annotate data that you’re using in training your LLMs.

Training tools: Products like Databricks Lakehouse and Amazon SageMaker offer the platform for distributed training.

Fine-tuning platforms: Comprehensive fine-tuning platforms like Tune Studio enable you to try any LLM, save interactions as high-quality datasets, train on top-tier hardware, optimize performance, and deploy to the infra of your choice!

You’ve seen a bunch of enterprise GenAI tools above. So, how do you know which one is right for you? Let’s find out.

How to choose the right GenAI tools

While choosing off-the-shelf products for your enterprise GenAI applications, you have a number of considerations to keep in mind. Here’s everything you need to ask before making your decisions.

1. How much does it cost?

Let’s face it: Generative AI is expensive. Across model costs, computing, maintenance, data storage, deployment, fine-tuning, etc., your GenAI implementation can quickly spiral out of control.

To avoid that, before you choose a tool, clearly understand what the total cost of ownership is.

Forecast TCO for any usage-based pricing your model provider might use, choose open source models for better cost optimization

Compare costs for on-prem and cloud infrastructure; the cloud’s infinite scaling might turn into a nightmare if you’re new to MLOps

Consider the cost of fine-tuning, which is often a people-intensive phase; include hiring costs into your budgets

Read more on unlocking value from GenAI on a budget.

2. How does it work?

Consider aspects of accuracy, efficiency, and performance for all tools you’re choosing.

General vs. specific models: You don’t need large general intelligence models for all applications. For instance, if you’re deploying a chatbot for investment bankers to write regular reports, you might want to choose an LLM designed for the financial domain. This has the potential to be more accurate at lower costs.

Small vs. large models: For most specific tasks, like customer service or analytical reporting, a fine-tuned small model is much better than a general purpose large model.

SaaS vs. customized: For creating content or visuals, you might prefer a SaaS tool like Jasper or Canva. However, for tasks that involve your intellectual property, you might need a customized implementation on your private cloud or on-prem. For instance, if you need data indexing for your internal resources, you might be better off with a customized solution.

3. How does it treat your proprietary data?

Speaking of usage policy for GenAI, while using off-the-shelf products like ChatGPT, Gartner warns organizations against inputting any personally identifiable information, sensitive information or company IP.

However, this limits the leverage you can gain from Generative AI as a whole. To build sustainable competitive advantage, choose tools that allow you to train models on your IP without losing your data. Open source LLMs, trained and deployed on your own infrastructure, are your best bet in this regard.

On the other hand, also consider the IP protection on content created by these GenAI tools. For instance, if you’re using ChatGPT (or similar tool) to write your blog posts, who holds its copyright?

4. How secure is the tool?

Ask your GenAI software vendor to share features of data security, privacy, encryption, authentication, authorization and access control embedded in the product. If you’re in highly regulated industries like healthcare or finance, also seek information about their compliance posture.

All of Tune AI’s products use data encryption, are GDPR/CCPA/HIPAA compliant, and SOC2 and ISO27001 certified.

5. What skills do you need for using the tool?

It would be easy to subscribe to a fine-tuning tool, but actually customizing the model might need skills in data science, machine learning, MLOps and more. So, before choosing a tool, look at what skills and capabilities you need to use it well.

6. How does it meet my needs?

Most critically, ensure that the tools you choose meet your needs to a T. Define your use cases, outline your goals, identify beta users, etc. to ensure you can reap ROI on your GenAI efforts. For some foundation on such strategic decisions, read our blog post on choosing the right LLMs for enterprise AI.

Alternatively, choose an end-to-end solution provider like Tune AI, who bring experts to use Tune Studio to fine-tune your models and make them available through a custom implementation of Tune Chat.

Written by

Anshuman Pandey

Co-founder and CEO