GenAI Governance Framework for Enterprises

Oct 30, 2024

Everything you need for efficient, cost-effective, sustainable, ethical, and responsible AI.

For a more detailed approach to AI governance, consider the National Institute of Standards and Technology (NIST) AI Risk Management Framework. Its 142-page playbook offers a granular and action-oriented approach to setting up GenAI governance.

“When you think about AI, think about electricity. It can electrocute people, it can spark dangerous fires, but today, I think none of us would give up heat, refrigeration and lighting for fear of electrocution. And, I think, so too would be for AI.” ~ Andrew Ng, DeepLearning.AI

What Andrew is pointing out is that every technology comes with risks and potential for misuse. This is true of AI as well. We’re already seeing deepfake videos, biased results, hallucinations, model theft, and more. These risks are only going to compound in the near future.

However, as with every disruptive technology since the printing press, the opportunities and possibilities are too great for us to give in to fear.

One of the most strategic, sustainable, and effective ways to manage the risks of Generative AI is governance.

In this ebook, we explore the what, why, when and how of building a Generative AI governance framework in your organization.

What is Generative AI governance?

Generative AI governance is a set of systems, processes, practices, and guardrails designed to enable responsible use of artificial intelligence. In essence, these are the systems that help maximize value from GenAI without compromising on safety, sustainability, and ethical practice.

A good AI governance framework comprises guardrails for all aspects of the ecosystem, such as:

Infrastructure: The cloud/on-prem infrastructure that enables GenAI applications

Data: All the first-party, second-party and third-party data that is used to train these GenAI applications

Models: The structure of GenAI models and the accuracy of the output they produce

Interfaces: The application interfaces that users interact with to generate content with GenAI

Why do organizations need independent GenAI governance?

Most enterprises today already have governance frameworks in place for IT, data, etc. Why, then, do they need a separate one for GenAI? Well, we’re glad you asked.

Compliance

Worldwide, laws governing AI applications are rapidly evolving. The Biden administration has released an executive order suggesting a comprehensive framework for AI governance. The EU AI Act has similar, yet stricter, requirements for the use of AI technologies. Soon, AI governance will become a standard compliance requirement.

Security

AI takes data from and returns data to various organizational systems, such as customer relationship management (CRM), enterprise resource planning (ERP), or financial tools. Protecting this data from malicious actors needs a security-focused approach to AI governance.

By the way, here’s our take on why you should choose on-prem implementation if you have security-intensive applications.

Customer trust

For any GenAI product to be successful, it needs to have customer trust. Users need to believe that the results provided by GenAI are accurate and unbiased. AI governance helps ensure exactly that. At the same time, AI must also protect users against any violations of human rights or dignity.

Continuous oversight

AI models evolve. An algorithm that is safe and accurate today might drift into unpleasant territory tomorrow. An independent GenAI governance framework helps ensure that every model meets quality, reliability and ethical standards on a consistent basis.

Risk management

Generative AI is new, with high potential for mistakes and misuse. Google DeepMind identifies ‘impersonation’ as the most common category of misuse, which creates never-before-seen risks for enterprises. For instance, anyone can impersonate your representatives more convincingly and scam your customers, resulting in financial and reputational damage.

In summary, every enterprise looking to use Generative AI needs to set up a governance framework proactively.

When is a good time to set up a generative AI governance framework?

For governance to function effectively, it needs to be set up before adopting Generative AI. However, what counts as GenAI adoption is often under question. Here are some scenarios.

Before any of your employees try ChatGPT or any other GenAI-enabled tool

Before you use any of your personal data as part of your prompt to a GenAI tool

Before you integrate GenAI into any of your enterprise tools, like a CRM or content management platform

Before you create your pilot GenAI project

The best time to set up governance was before any of your team members ever touched an AI-powered tool. The second-best time is now.

How to create a comprehensive GenAI governance framework?

The governance needs of every organization are different. Depending on your infra, data, use cases, and stakeholders, you’ll need a customized governance framework. However, there are a few best practices that can help all organizations. We offer them below.

Step 1: Paint your vision for GenAI

For any governance framework to succeed, it needs to start with why. So, begin by defining the purpose of your GenAI strategy. Show the reader (i.e., stakeholder) what GenAI can do for them.

Goals: Set measurable and meaningful targets for your GenAI implementation. For example, your goal could be a 20% increase in developer productivity or a 10% reduction in time to market.

Principles: Outline the ethical principles that your team will abide by during the design, development, deployment and use of GenAI. You might include fairness, transparency, auditability, human-first approach, etc.

Recommendations: Proactively communicate your vision to every member of the organization. Because the framework is completely new, people might struggle to put your vision into practice. So, offer practical examples and recommendations.

Step 2: Create a governance structure

This is the step where you put together the right team to create and implement your GenAI governance framework.

Steering committee: To give strategic direction and make important decisions, create a steering committee with department heads and relevant senior leaders.

Cross-functional strategy teams: Bring together experts from product, engineering, IT, data security, compliance, HR, legal, and any other department to contribute to the creation of your governance framework.

Chief AI officer: Create a senior management position for someone to be accountable for day-to-day compliance with your governance framework. If you’re just beginning, this can be a part-time responsibility for your CTO or CIO as well.

While setting up your governance structure:

Outline each team’s mandate

Identify every individual member’s roles and responsibilities

Schedule regular meetings for them to review processes and make improvements

Step 3: Assess your risks

Once you have a skeletal structure ready, it’s time to understand your current risk exposure. Bring in the cross-functional strategy team to identify risks across the following dimensions.

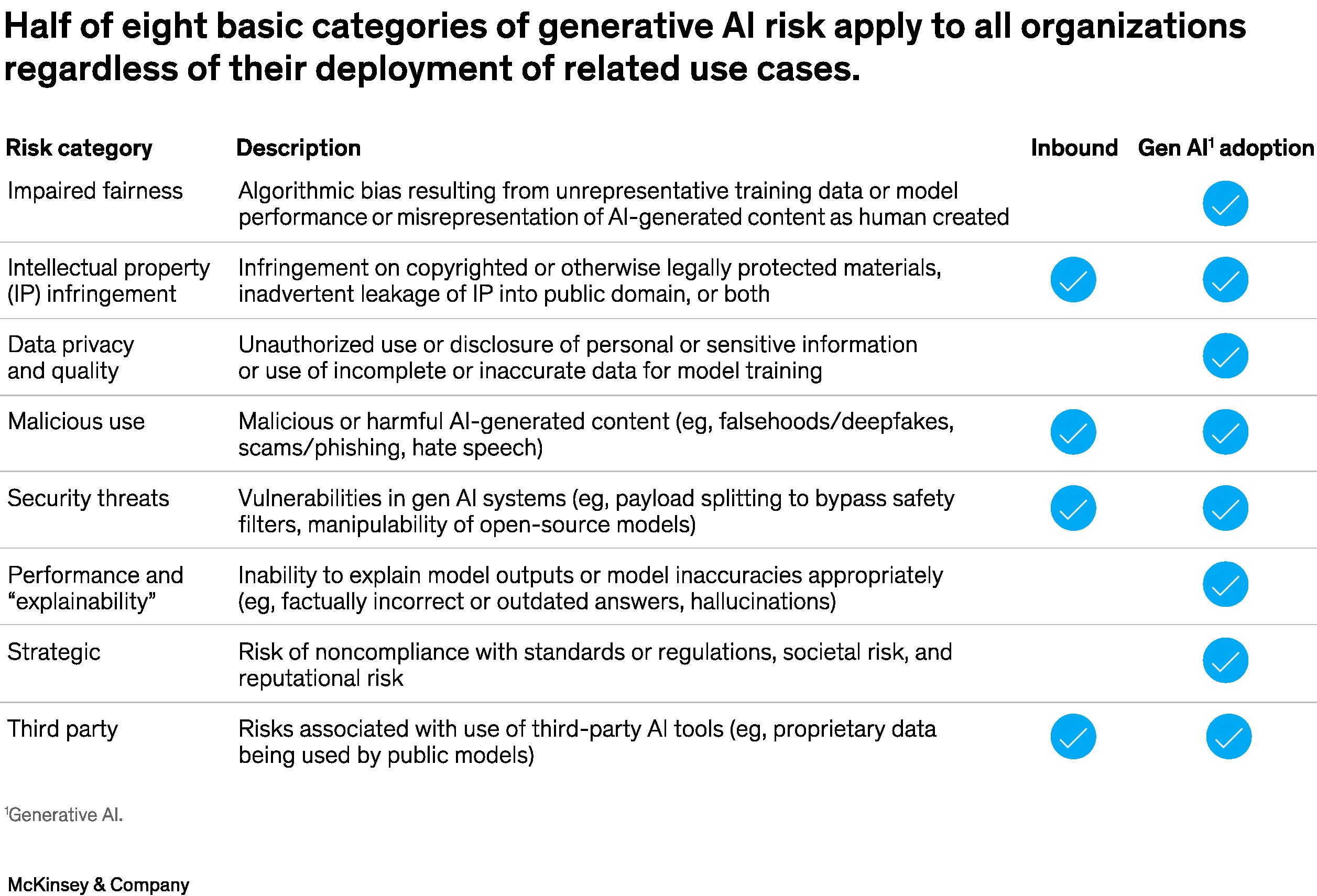

McKinsey outlines eight basic categories of GenAI risks.

GenAI risk categories (source: McKinsey & Company)

In addition to the above, you might run into specific risks applicable to your industry or use case. For instance, the compliance demands around data and GenAI are inordinately high in healthcare. The risk of inadvertent plagiarism might be high in marketing and content management.
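One way to capture what this assessment finds is a simple risk register. The sketch below is illustrative only: the category labels, the 1 to 5 scales, and the likelihood-times-severity score are assumptions chosen for the example, not McKinsey's categories or a prescribed method.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    category: str      # hypothetical label, e.g. "privacy" or "ip"
    likelihood: int    # assumed scale: 1 (rare) to 5 (almost certain)
    severity: int      # assumed scale: 1 (minor) to 5 (critical)

    @property
    def score(self) -> int:
        # Assumed scoring rule: likelihood multiplied by severity.
        return self.likelihood * self.severity


def prioritize(risks: list[Risk]) -> list[Risk]:
    """Return risks ordered from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


# Example entries are invented for illustration.
register = [
    Risk("Marketing copy plagiarizes a source", "ip", 4, 3),
    Risk("Chatbot gives a biased answer", "fairness", 2, 4),
    Risk("Patient data leaked through prompts", "privacy", 3, 5),
]

for risk in prioritize(register):
    print(f"{risk.score:>2}  {risk.category:<9} {risk.name}")
```

Sorting by score gives the cross-functional team a first-pass order in which to discuss mitigation; the ratings themselves still need human judgment.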

Step 4: Plan your GenAI governance and risk management

Assess the likelihood and severity of each risk and build mitigation strategies around them. Some of the key factors for you to consider while building your governance and risk management policies are as follows.

Manage regulatory compliance

Set up processes, guidelines and automation to ensure laws related to data privacy, security, copyright, customer rights, and intellectual property are consistently adhered to.

Enable trustworthy AI

Ensure transparency and auditability of Generative AI’s decision-making. Establish processes to trace the origin and history of training data.

Conduct model evaluations

Build a practice of regularly performing model evaluations. The UK’s AI Safety Institute has a comprehensive open-source framework for this.

Set minimum thresholds

Clearly define your boundaries for GenAI. Set up go/no-go policies. Set standards for performance, quality assurance, security, etc. along with a clear escalation matrix.
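A go/no-go policy can be written down as data and checked automatically. The following is a minimal sketch under stated assumptions: the metric names, limits, and escalation owners are hypothetical placeholders, and your own policy would define the real ones.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    metric: str
    limit: float
    higher_is_better: bool
    escalate_to: str   # hypothetical owner in the escalation matrix

    def passes(self, value: float) -> bool:
        if self.higher_is_better:
            return value >= self.limit
        return value <= self.limit


# Hypothetical policy values, for illustration only.
POLICY = [
    Threshold("answer_accuracy", 0.90, True, "model owner"),
    Threshold("toxic_output_rate", 0.01, False, "chief AI officer"),
    Threshold("p95_latency_seconds", 2.0, False, "platform team"),
]


def go_no_go(measured: dict[str, float]) -> tuple[bool, list[str]]:
    """Return (go, escalations). A missing metric counts as a failure."""
    escalations = []
    for t in POLICY:
        value = measured.get(t.metric)
        if value is None or not t.passes(value):
            escalations.append(f"{t.metric} -> {t.escalate_to}")
    return (not escalations, escalations)


go, escalations = go_no_go(
    {"answer_accuracy": 0.93, "toxic_output_rate": 0.02, "p95_latency_seconds": 1.4}
)
print("GO" if go else "NO-GO", escalations)
```

Treating a missing metric as a failure is a deliberate choice here: a release that was never measured should not pass by default.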

Design response and remediation

Establish policies for what happens when any of your risks materialize. Have workflows for immediate responses, reporting, stakeholder communication, regulatory declarations, customer alerts, etc.

Schedule regular audits

Schedule meetings with the leadership and governance teams to review the robustness and relevance of the framework. Use KPI-driven dashboards to get an objective view of performance. In this process:

Conduct a regular inventory of AI systems

Review the number and frequency of issues reported

Measure the effectiveness of the response and remediation mechanisms

Set up human-in-the-loop protocols

Clearly state the protocols for autonomous decisions and human-in-the-loop workflows. Identify the roles and responsibilities of people in the process.
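Such a protocol can be expressed as a small routing rule. This is a sketch, not a recommended policy: it assumes the model reports a confidence value, and the confidence cutoff, use-case names, and reviewer roles are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Route(Enum):
    AUTONOMOUS = "autonomous"
    HUMAN_REVIEW = "human_review"


@dataclass
class Decision:
    use_case: str
    confidence: float   # assumed to be available, from 0.0 to 1.0
    high_impact: bool   # e.g. affects a customer's money or rights


# Hypothetical protocol values; set these in your own governance policy.
MIN_CONFIDENCE = 0.85
REVIEWERS = {"refund_approval": "finance lead", "support_reply": "support lead"}
DEFAULT_REVIEWER = "governance team"


def route(decision: Decision) -> tuple[Route, Optional[str]]:
    """Send high-impact or low-confidence decisions to a named reviewer."""
    if decision.high_impact or decision.confidence < MIN_CONFIDENCE:
        reviewer = REVIEWERS.get(decision.use_case, DEFAULT_REVIEWER)
        return Route.HUMAN_REVIEW, reviewer
    return Route.AUTONOMOUS, None


print(route(Decision("support_reply", 0.95, high_impact=False)))
print(route(Decision("refund_approval", 0.99, high_impact=True)))
```

Naming a default reviewer means every decision that leaves the autonomous path has an accountable person, which mirrors the roles-and-responsibilities point above.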

Step 5: Create a culture of responsible GenAI

AI governance won’t be effective if it’s treated as a checkbox. To drive innovation and business growth with GenAI, governance principles and processes need to be woven into the very fabric of the organization.

Educate your teams: Conduct workshops, seminars and other training sessions to ensure everyone understands the pervasiveness, utility and opportunities of GenAI. Repeat and refresh this training periodically.

Lead from the top: Champion a culture of responsible AI from the top. Lead by example and show team members how to mitigate AI risks.

Build AI talent: Set up training and development programs to upskill employees for the new world. Slowly transition team members to newer roles and tasks.

Take a human-centered approach: Don’t ignore the socio-technical implications of AI. Keep in mind diversity, inclusivity, transparency, and fairness while making AI-related decisions.

Step 6: Continuously improve

GenAI governance is not a one-time event. It is an ongoing activity that involves every single member of your organization. To ensure that your governance is consistently implemented, you need to keep it updated.

Conduct regular reviews of all GenAI systems, processes, and output

Capture user feedback on key performance indicators in real time

Implement updates to LLMs and tools thoughtfully

Track regulatory advancements in this space to evolve your compliance posture

As you can see from the resources linked above, this governance framework, though thorough, is only a starting point. Depending on the nature of your business, creating a GenAI governance framework can take significant time, effort and specialized skills.

Don’t feel the need to do it all alone. Tune AI’s experts are well-versed in managing diverse LLMs, implementations and use cases with an eye for the right governance to suit your needs.

Get the most important GenAI conversation going. Speak to a Tune AI expert about governance today.

About Tune AI

Tune AI is an enterprise GenAI platform that accelerates enterprise adoption through thoughtful, consultative, ROI-focused implementations. Tune AI’s experts are happy to build, test and launch custom models for your enterprise.

Everything you need for efficient, cost-effective, sustainable, ethical, and responsible AI.

For a more detailed approach to AI governance, consider The National Institute of Standards and Technology (NIST) Risk Management Framework. Its 142-page playbook offers a granular and action-oriented approach to setting up GenAI governance.

“When you think about AI, think about electricity. It can electrocute people, it can spark dangerous fires, but today, I think none of us would give up heat, refrigeration and lighting for fear of electrocution. And, I think, so too would be for AI.” ~ Andrew Ng, Deeplearning.AI

What Andrew is pointing out is that every technology comes with a great number of risks and potential for misuse. This is true of AI as well. We’re already seeing deep fake videos, biased results, hallucination, model theft, so on and so forth. These risks are only going to compound in the near future.

However, like every disruptive technology since the printing press, the opportunities and possibilities are too high for the human race to give in to the fears.

One of the most strategic, sustainable, and effective ways to manage the risks of Generative AI is governance.

In this ebook, we explore the what, why, when and how of building the Generative AI governance framework in your organization.

What is Generative AI governance?

Generative AI governance is a set of systems, processes, practices, and guardrails designed to enable responsible use of artificial intelligence. In essence, these are the systems that help maximize value from GenAI without compromising on safety, sustainability, and ethical practice.

A good AI governance framework comprises guardrails for all aspects of the ecosystem, such as:

Infrastructure: The cloud/on-prem infrastructure that enables GenAI applications

Data: All the first-party, second-party and third-party data that is used to train these GenAI applications

Models: The structure and accuracy output created by GenAI models

Interfaces: The application interfaces that users interact with to generate content with GenAI

Why do organizations need an independent GenAI governance?

Most enterprises today already have governance frameworks in place for IT, data, etc. Why then, do they need a separate one for GenAI? Well, we’re glad you asked.

Compliance

Worldwide, law governing AI applications are rapidly evolving. The Biden administration has released an executive order suggesting a comprehensive framework for AI governance. The EU AI Act has similar, yet stricter, requirements for the use of AI technologies. Soon, AI governance would become a compliance status quo.

Security

AI takes and returns data into various organizational systems like the customer relationship management (CRM), enterprise resource planning (ERP) or financial tools. Protecting this data from malicious actors needs a security-focused approach to AI governance.

By the way, here’s our take on why you should choose on-prem implementation if you have security-intensive applications.

Customer trust

For any GenAI product to be successful, it needs to have customer trust. Users need to believe that the results provided by GenAI are accurate and unbiased. AI governance ensures exactly that. On the other hand, AI must also protect users against any violations of human rights or dignity.

Continuous oversight

AI models evolve. An algorithm that was safe and accurate today might drift into unpleasant territory. An independent GenAI governance framework ensures that every model meets quality, reliability and ethical standards on a consistent basis.

Risk management

Generative AI is new with high potential for mistakes and misuse. Google Deep Mind identifies ‘impersonation’ as the most common category of misuse, which creates never-seen-before risks for enterprises. For instance, anyone can impersonate your representatives more accurately and scam your customers, resulting in financial and reputational damage.

In summary, every enterprise looking to use Generative AI needs to set up a governance framework proactively.

When is a good time to set up a generative AI governance framework?

For governance to function effectively, it needs to be set up before adopting Generative AI. However, what counts as GenAI adoption is often under question. Here are some scenarios.

Before any of your employees try ChatGPT or any other GenAI enabled tool

Before you use any of your personal data as part of your prompt to a GenAI tool

Before you integrate GenAI into any of your enterprise tools, like a CRM or content management platform

Before you create your pilot GenAI project

The best time to set up governance was before any of your team members ever touched an AI-powered tool. The second best time is now.

How to create a comprehensive GenAI governance framework?

The governance needs of every organization are different. Depending on your infra, data, use cases, and stakeholders, you’ll need a customized governance framework. However, there are a few best practices that can help all organizations. We offer them below.

Step 1: Paint your vision for GenAI

For every governance framework to succeed, it needs to start with why. So, begin by defining the purpose of your GenAI strategy. Show the reader (i.e., stakeholder) what GenAI can do for them.

Goals: Set measurable and meaningful targets for your GenAI implementation. For example, your goal could be a 20% increase in developer productivity or a 10% increase in time to market.

Principles: Outline the ethical principles that your team will abide by during the design, development, deployment and use of GenAI. You might include fairness, transparency, auditability, human-first approach, etc.

Recommendations: Proactively communicate your vision to every member in the organization. As a completely new framework, people might struggle to apply your vision to practice. So, offer practical examples and recommendations.

Step 2: Create a governance structure

This is the step where you put together the right team to create and implement your GenAI governance framework.

Steering committee: To give strategic direction and make important decisions, create a steering committee with department heads and relevant senior leaders.

Cross-functional strategy teams: Bring together experts from product, engineering, IT, data security, compliance, HR, legal, and any other department to contribute to the creation of your governance framework.

Chief AI officer: Create a senior management position for someone to be accountable for the day-to-day compliance of your governance framework. If you’re just beginning, this can be a part-time responsibility for your CTO or CIO as well.

While setting up your governance structure:

Outline each team’s mandate

Identify every individual member’s roles and responsibilities

Schedule regular meetings for them to review processes and make improvements

Step 3: Assess your risks

Once you have a skeletal structure ready, it’s time to understand your current risk exposure. Bring the cross-functional strategy team to identify risks across the following dimensions.

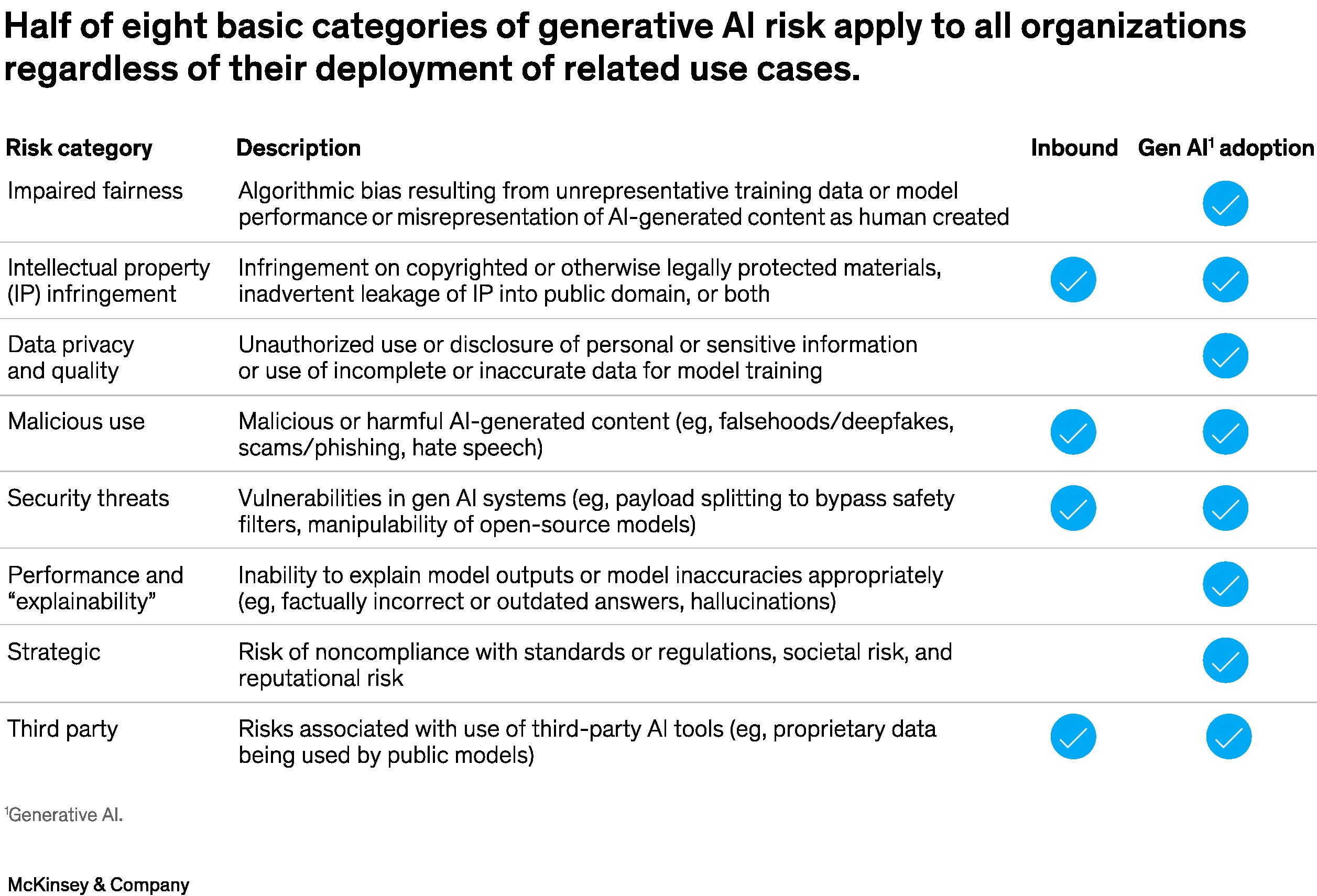

McKinsey outlines eight basic categories of GenAI risks.

GenAI risk categories (source: McKinsey & Company)

In addition to the above, you might run into specific risks applicable to your industry or use case. For instance, the compliance demands around data and GenAI are inordinately high in healthcare. The inadvertent risks of plagiarism might be high with marketing and content management.

Step 4: Plan your GenAI governance and risk management

Assess the likelihood and severity of each risk and build mitigation strategies around them. Some of the key factors for you to consider while building your governance and risk management policies are as follows.

Manage regulatory compliance

Set up processes, guidelines and automation to ensure laws related to data privacy, security, copyright, customer rights, and intellectual property are consistently adhered to.

Enable trustworthy AI

Ensure transparency and auditability of Generative AI’s decision-making. Establish processes to trace the origin and history of training data.

Conduct model evaluations

Build a practice of regularly performing model evaluations. The UK’s AI Safety Institute has a comprehensive open source framework to this.

Set minimum thresholds

Clearly define your boundaries for GenAI. Set up go/no-go policies. Set standards for performance, quality assurance, security, etc. along with a clear escalation matrix.

Design response and remediation

Establish policies in case any of your risks materialize. Have workflows for immediate responses, reporting, stakeholder communication, regulatory declarations, customer alerts, etc.

Schedule regular audits

Schedule meetings with the leadership and governance teams to review the robustness and relevance of the framework. Use KPI-driven dashboards to get an objective view of performance. In this process:

Conduct regular AI systems inventory

Review the number and frequency of issues reported

Measure the effectiveness of the response and remediation mechanisms

Set up human-in-the-loop protocols

Clearly state the protocols for autonomous decisions and human-in-the-loop workflows. Identify the roles and responsibilities of people in the process.

Step 5: Create a culture of responsible GenAI

AI governance won’t be effective if it’s treated as a checkbox. For innovation and business growth using GenAI, its principles and processes need to be imbibed in the very fabric of the organization.

Educate your teams: Conduct workshops, seminars and other trainings to ensure everyone understands the pervasiveness, utility and opportunities of GenAI. Reiterate and refresh these trainings periodically.

Lead from the top: Champion a culture of responsible AI from the top. Lead by example and show team members how to mitigate AI risks.

Build AI talent: Set up training and development programs to upskill employees for the new world. Slowly transition team members to newer roles and tasks.

Take a human-centered approach: Don’t ignore the socio-technical implications of AI. Keep in mind diversity, inclusivity, transparency, and fairness while making AI-related decisions.

Step 6: Continuously improve

GenAI governance is not a one-time event. It is an ongoing activity that involves every single member of your organization. To ensure that your governance is consistently implemented, you need to keep it updated.

Conduct regular reviews of all GenAI systems, processes, and output

Capture user feedback on key performance indicators in real-time

Implement updates to LLMs and tools thoughtfully

Track regulatory advancements in this space to evolve your compliance posture

As you can see from the resources linked above, this governance framework—though thorough—is only a starting point. Depending on the nature of your business, creating a GenAI governance framework would take significant time, effort and specialized skills.

Don’t feel the need to do it all alone. Tune AI’s experts are well-versed in managing diverse LLMs, implementations and use cases with an eye for the right governance to suit your needs.

Get the most important GenAI conversation going. Speak to a Tune AI expert about governance today.

About Tune AI

Tune AI is an enterprise GenAI platform that accelerates enterprise adoption through thoughtful, consultative, ROI-focused implementations. Tune AI’s experts are happy to build, test and launch custom models for your enterprise.

Everything you need for efficient, cost-effective, sustainable, ethical, and responsible AI.

For a more detailed approach to AI governance, consider The National Institute of Standards and Technology (NIST) Risk Management Framework. Its 142-page playbook offers a granular and action-oriented approach to setting up GenAI governance.

“When you think about AI, think about electricity. It can electrocute people, it can spark dangerous fires, but today, I think none of us would give up heat, refrigeration and lighting for fear of electrocution. And, I think, so too would be for AI.” ~ Andrew Ng, Deeplearning.AI

What Andrew is pointing out is that every technology comes with a great number of risks and potential for misuse. This is true of AI as well. We’re already seeing deep fake videos, biased results, hallucination, model theft, so on and so forth. These risks are only going to compound in the near future.

However, like every disruptive technology since the printing press, the opportunities and possibilities are too high for the human race to give in to the fears.

One of the most strategic, sustainable, and effective ways to manage the risks of Generative AI is governance.

In this ebook, we explore the what, why, when and how of building the Generative AI governance framework in your organization.

What is Generative AI governance?

Generative AI governance is a set of systems, processes, practices, and guardrails designed to enable responsible use of artificial intelligence. In essence, these are the systems that help maximize value from GenAI without compromising on safety, sustainability, and ethical practice.

A good AI governance framework comprises guardrails for all aspects of the ecosystem, such as:

Infrastructure: The cloud/on-prem infrastructure that enables GenAI applications

Data: All the first-party, second-party and third-party data that is used to train these GenAI applications

Models: The structure and accuracy output created by GenAI models

Interfaces: The application interfaces that users interact with to generate content with GenAI

Why do organizations need an independent GenAI governance?

Most enterprises today already have governance frameworks in place for IT, data, etc. Why then, do they need a separate one for GenAI? Well, we’re glad you asked.

Compliance

Worldwide, law governing AI applications are rapidly evolving. The Biden administration has released an executive order suggesting a comprehensive framework for AI governance. The EU AI Act has similar, yet stricter, requirements for the use of AI technologies. Soon, AI governance would become a compliance status quo.

Security

AI takes and returns data into various organizational systems like the customer relationship management (CRM), enterprise resource planning (ERP) or financial tools. Protecting this data from malicious actors needs a security-focused approach to AI governance.

By the way, here’s our take on why you should choose on-prem implementation if you have security-intensive applications.

Customer trust

For any GenAI product to be successful, it needs to have customer trust. Users need to believe that the results provided by GenAI are accurate and unbiased. AI governance ensures exactly that. On the other hand, AI must also protect users against any violations of human rights or dignity.

Continuous oversight

AI models evolve. An algorithm that was safe and accurate today might drift into unpleasant territory. An independent GenAI governance framework ensures that every model meets quality, reliability and ethical standards on a consistent basis.

Risk management

Generative AI is new with high potential for mistakes and misuse. Google Deep Mind identifies ‘impersonation’ as the most common category of misuse, which creates never-seen-before risks for enterprises. For instance, anyone can impersonate your representatives more accurately and scam your customers, resulting in financial and reputational damage.

In summary, every enterprise looking to use Generative AI needs to set up a governance framework proactively.

When is a good time to set up a generative AI governance framework?

For governance to function effectively, it needs to be set up before adopting Generative AI. However, what counts as GenAI adoption is often under question. Here are some scenarios.

Before any of your employees try ChatGPT or any other GenAI enabled tool

Before you use any of your personal data as part of your prompt to a GenAI tool

Before you integrate GenAI into any of your enterprise tools, like a CRM or content management platform

Before you create your pilot GenAI project

The best time to set up governance was before any of your team members ever touched an AI-powered tool. The second best time is now.

How to create a comprehensive GenAI governance framework?

The governance needs of every organization are different. Depending on your infra, data, use cases, and stakeholders, you’ll need a customized governance framework. However, there are a few best practices that can help all organizations. We offer them below.

Step 1: Paint your vision for GenAI

For every governance framework to succeed, it needs to start with why. So, begin by defining the purpose of your GenAI strategy. Show the reader (i.e., stakeholder) what GenAI can do for them.

Goals: Set measurable and meaningful targets for your GenAI implementation. For example, your goal could be a 20% increase in developer productivity or a 10% increase in time to market.

Principles: Outline the ethical principles that your team will abide by during the design, development, deployment and use of GenAI. You might include fairness, transparency, auditability, human-first approach, etc.

Recommendations: Proactively communicate your vision to every member in the organization. As a completely new framework, people might struggle to apply your vision to practice. So, offer practical examples and recommendations.

Step 2: Create a governance structure

This is the step where you put together the right team to create and implement your GenAI governance framework.

Steering committee: To give strategic direction and make important decisions, create a steering committee with department heads and relevant senior leaders.

Cross-functional strategy teams: Bring together experts from product, engineering, IT, data security, compliance, HR, legal, and any other department to contribute to the creation of your governance framework.

Chief AI officer: Create a senior management position for someone to be accountable for the day-to-day compliance of your governance framework. If you’re just beginning, this can be a part-time responsibility for your CTO or CIO as well.

While setting up your governance structure:

Outline each team’s mandate

Identify every individual member’s roles and responsibilities

Schedule regular meetings for them to review processes and make improvements

Step 3: Assess your risks

Once you have a skeletal structure ready, it’s time to understand your current risk exposure. Bring the cross-functional strategy team to identify risks across the following dimensions.

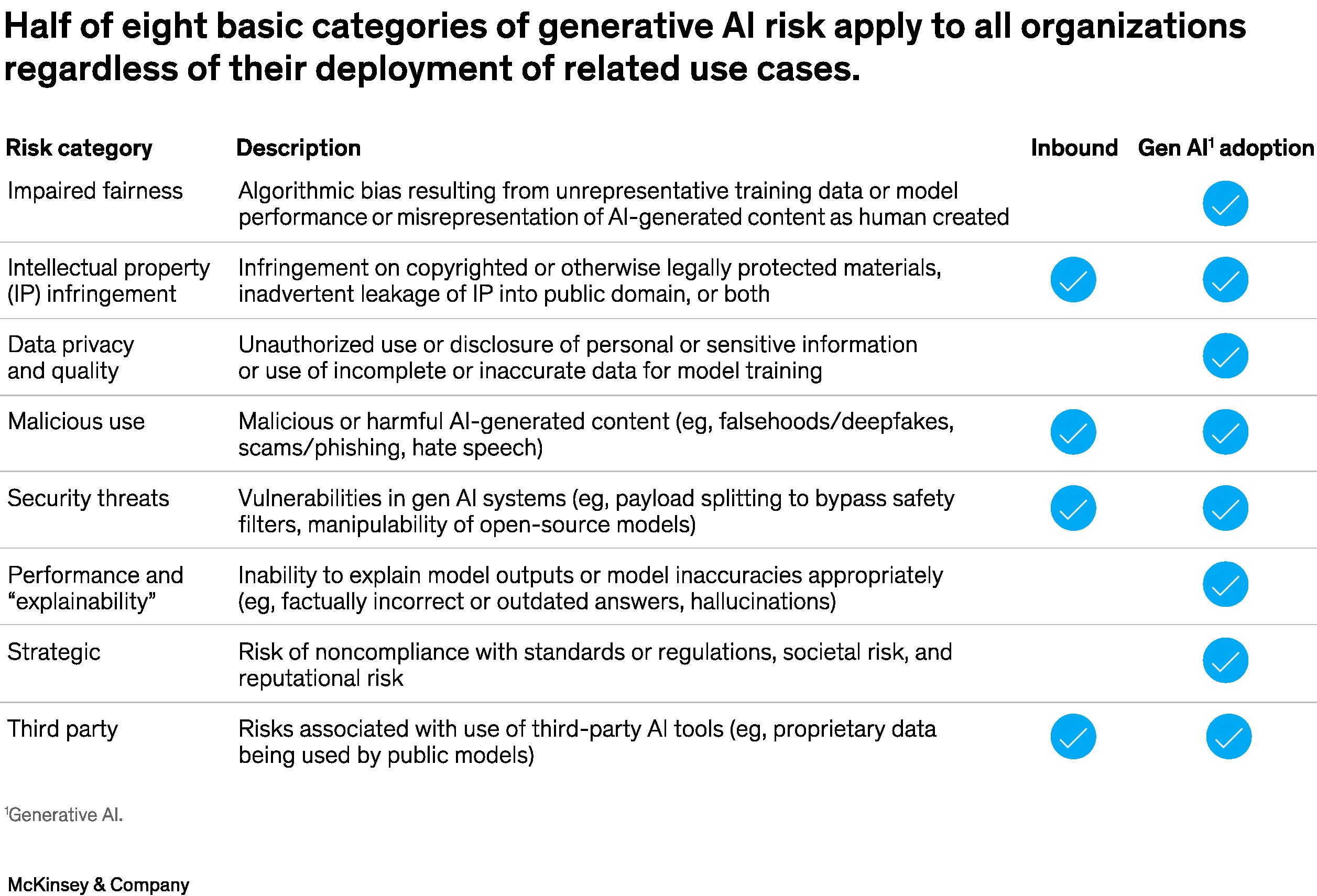

McKinsey outlines eight basic categories of GenAI risks.

GenAI risk categories (source: McKinsey & Company)

In addition to the above, you might run into specific risks applicable to your industry or use case. For instance, the compliance demands around data and GenAI are inordinately high in healthcare. The inadvertent risks of plagiarism might be high with marketing and content management.

Step 4: Plan your GenAI governance and risk management

Assess the likelihood and severity of each risk and build mitigation strategies around them. Some of the key factors for you to consider while building your governance and risk management policies are as follows.

Manage regulatory compliance

Set up processes, guidelines and automation to ensure laws related to data privacy, security, copyright, customer rights, and intellectual property are consistently adhered to.

Enable trustworthy AI

Ensure transparency and auditability of Generative AI’s decision-making. Establish processes to trace the origin and history of training data.

Conduct model evaluations

Build a practice of regularly performing model evaluations. The UK’s AI Safety Institute has a comprehensive open source framework to this.

Set minimum thresholds

Clearly define your boundaries for GenAI. Set up go/no-go policies. Set standards for performance, quality assurance, security, etc. along with a clear escalation matrix.

Design response and remediation

Establish policies in case any of your risks materialize. Have workflows for immediate responses, reporting, stakeholder communication, regulatory declarations, customer alerts, etc.

Schedule regular audits

Schedule meetings with the leadership and governance teams to review the robustness and relevance of the framework. Use KPI-driven dashboards to get an objective view of performance. In this process:

Conduct regular AI systems inventory

Review the number and frequency of issues reported

Measure the effectiveness of the response and remediation mechanisms

Set up human-in-the-loop protocols

Clearly state the protocols for autonomous decisions and human-in-the-loop workflows. Identify the roles and responsibilities of people in the process.

Step 5: Create a culture of responsible GenAI

AI governance won’t be effective if it’s treated as a checkbox. For innovation and business growth using GenAI, its principles and processes need to be imbibed in the very fabric of the organization.

Educate your teams: Conduct workshops, seminars and other trainings to ensure everyone understands the pervasiveness, utility and opportunities of GenAI. Reiterate and refresh these trainings periodically.

Lead from the top: Champion a culture of responsible AI from the top. Lead by example and show team members how to mitigate AI risks.

Build AI talent: Set up training and development programs to upskill employees for the new world. Slowly transition team members to newer roles and tasks.

Take a human-centered approach: Don’t ignore the socio-technical implications of AI. Keep in mind diversity, inclusivity, transparency, and fairness while making AI-related decisions.

Step 6: Continuously improve

GenAI governance is not a one-time event. It is an ongoing activity that involves every single member of your organization. To ensure that your governance is consistently implemented, you need to keep it updated.

Conduct regular reviews of all GenAI systems, processes, and output

Capture user feedback on key performance indicators in real-time

Implement updates to LLMs and tools thoughtfully

Track regulatory advancements in this space to evolve your compliance posture

As you can see from the resources linked above, this governance framework—though thorough—is only a starting point. Depending on the nature of your business, creating a GenAI governance framework would take significant time, effort and specialized skills.

Don’t feel the need to do it all alone. Tune AI’s experts are well-versed in managing diverse LLMs, implementations and use cases with an eye for the right governance to suit your needs.

Get the most important GenAI conversation going. Speak to a Tune AI expert about governance today.

About Tune AI

Tune AI is an enterprise GenAI platform that accelerates enterprise adoption through thoughtful, consultative, ROI-focused implementations. Tune AI’s experts are happy to build, test and launch custom models for your enterprise.

Written by

Anshuman Pandey

Co-founder and CEO