SLoRA: Efficient Fine-Tuning for Large Language Models

Jul 29, 2024

You're a passionate AI developer, eager to harness the power of large language models (LLMs) for your latest project. You've got brilliant ideas, but there's a catch: fine-tuning these massive models feels like trying to parallel park a cruise ship in a crowded marina. It's resource-intensive, time-consuming, and frankly, a bit overwhelming.

Sound familiar? You're not alone in this boat.

Before we dive deeper, let's clarify what we mean by fine-tuning:

Fine-tuning is the process of further training a pre-trained model on a specific task or dataset to adapt its knowledge for a particular application.

The world of AI has been grappling with a significant challenge: how to efficiently fine-tune LLMs without breaking the bank or melting your hardware (we are all GPU poor, except NVIDIA). Traditional fine-tuning methods are like using a sledgehammer to crack a nut: they get the job done, but at what cost?

Enter SLoRA (Sparse Low-Rank Adaptation), the unsung hero of efficient model fine-tuning.

Fine-tuning large language models (LLMs) has become a crucial step in achieving state-of-the-art results in various natural language processing (NLP) tasks. However, this process often comes with significant computational costs, memory requirements, and time constraints. In this article, we'll explore SLoRA, a novel approach to efficient model fine-tuning that promises to revolutionise the way we work with LLMs. By leveraging sparse low-rank adaptation, SLoRA offers a faster, more cost-effective, and more sustainable way to fine-tune LLMs without sacrificing performance.

What is SLoRA?

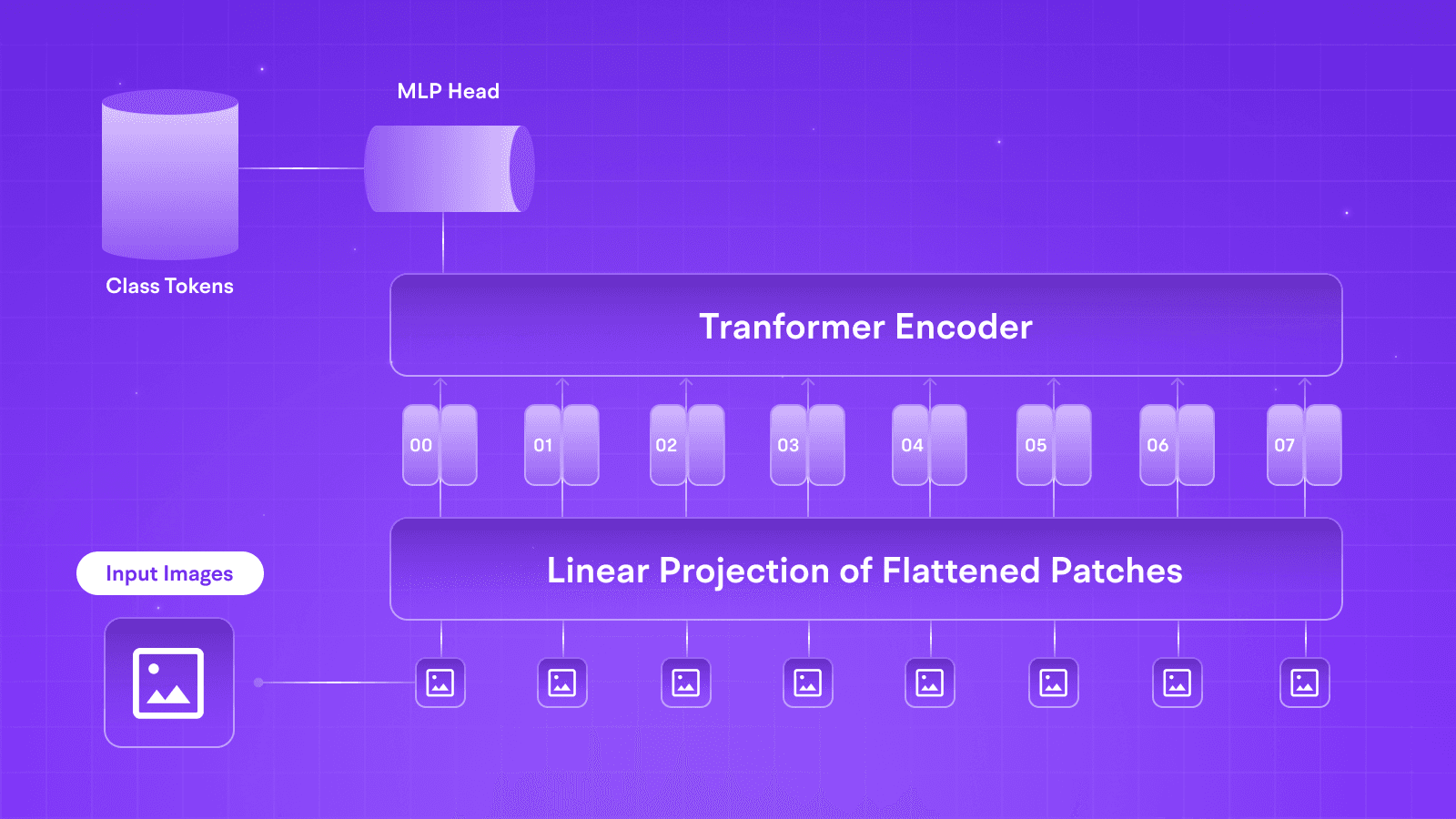

To understand SLoRA, it's essential to first grasp the concept of Low-Rank Adaptation (LoRA). LoRA is a parameter-efficient fine-tuning method that keeps the pre-trained weights frozen and trains only a small set of added low-rank matrices. This approach has shown remarkable success in reducing computational costs and memory requirements for model fine-tuning.

However, LoRA still updates a relatively large number of parameters, which can be computationally expensive and memory-intensive. This is where SLoRA comes in: by introducing sparsity into the LoRA framework, SLoRA further reduces the number of updated parameters to approximately 1% of the original model's parameters. This drastic reduction in updated parameters leads to significant computational savings and faster convergence rates.

SLoRA stands for Sparse Low-Rank Adaptation, a method designed to enhance the efficiency of fine-tuning LLMs. It builds on the concept of LoRA, which constrains the update of pre-trained weights using low-rank decomposition. SLoRA introduces sparsity into this approach, focusing only on a subset of parameters that significantly impact the model's performance.

Here's a simple analogy: imagine you have a giant jigsaw puzzle. LoRA would focus on updating a specific section of the puzzle, while SLoRA would pinpoint only the most important pieces within that section.
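To put rough numbers on the low-rank idea, here is a minimal sketch that counts trainable values for a single weight matrix. The layer size (4096 x 4096) and the rank (64) are assumptions picked for illustration, and the 1% figure is simply the article's estimate applied to that one layer; none of this comes from the SLoRA paper.

```python
def full_finetune_params(d_out: int, d_in: int) -> int:
    """Trainable values when every entry of a d_out x d_in weight matrix is updated."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable values for a low-rank update B @ A, with B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)


# Hypothetical layer size and rank, chosen only for illustration.
d_out, d_in, rank = 4096, 4096, 64

full = full_finetune_params(d_out, d_in)
lora = lora_params(d_out, d_in, rank)
sparse_budget = full // 100  # "about 1%" of this layer's entries

print(f"full fine-tuning : {full:,} trainable values")
print(f"LoRA (rank {rank})   : {lora:,} trainable values")
print(f"1% sparse budget : {sparse_budget:,} trainable values")
```

The point of the comparison is the order of magnitude: the low-rank factors grow with `rank * (d_out + d_in)` rather than `d_out * d_in`, and a sparsity budget trims the count further.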

How SLoRA Works

SLoRA employs a sparse matrix approach where the weight updates are constrained to a low-rank format and further sparsified. This involves decomposing the weight matrix into a product of two smaller matrices and applying updates only to a sparse subset of the original parameters. This method reduces the density of updates to about 1%, significantly cutting down on the resources needed for training.

Think of a weight matrix in an LLM as a giant grid of numbers. These numbers represent the connections between different parts of the model. SLoRA uses a technique called sparse matrix decomposition to break down this grid into smaller, more manageable pieces.

Imagine slicing a pizza into smaller triangles. SLoRA only updates the toppings on a select few of those triangles, leaving the rest untouched. This dramatically reduces the amount of data we need to process and store.
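The idea above can be sketched in a few lines of dependency-free Python. This is an illustration under stated assumptions, not the paper's algorithm: the mask `M` is chosen at random, it is applied to the product `B @ A`, and the matrix sizes are toy values. A real method would need a principled way to pick which entries matter.

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def random_mask(rows, cols, density, rng):
    """Binary mask keeping about `density` of the entries (random selection is an assumption)."""
    keep = set(rng.sample(range(rows * cols), max(1, round(density * rows * cols))))
    return [[1 if r * cols + c in keep else 0 for c in range(cols)] for r in range(rows)]


rng = random.Random(0)
d_out, d_in, rank, density = 20, 20, 2, 0.01  # toy sizes, not real model dimensions

W = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]    # frozen pre-trained weights
B = [[rng.gauss(0, 0.1) for _ in range(rank)] for _ in range(d_out)]  # low-rank factor, d_out x rank
A = [[rng.gauss(0, 0.1) for _ in range(d_in)] for _ in range(rank)]   # low-rank factor, rank x d_in
M = random_mask(d_out, d_in, density, rng)                            # about 1% of entries kept

low_rank = matmul(B, A)  # dense low-rank update before sparsification
delta = [[m * u for m, u in zip(m_row, u_row)] for m_row, u_row in zip(M, low_rank)]
W_adapted = [[w + d for w, d in zip(w_row, d_row)] for w_row, d_row in zip(W, delta)]

changed = sum(w != wa for w_row, wa_row in zip(W, W_adapted) for w, wa in zip(w_row, wa_row))
print(f"{changed} of {d_out * d_in} weights differ from the frozen matrix")
```

Only `B`, `A` and the mask positions have to be stored for the adaptation, while `W` itself is never modified.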

Comparison with Traditional Fine-Tuning Methods

Traditional fine-tuning involves updating all model parameters, which is resource-intensive and time-consuming. In contrast, SLoRA updates only a small, significant subset of parameters, achieving similar performance with much lower computational overhead.

SLoRA in a nutshell:

SLoRA builds on the foundation of LoRA (Low-Rank Adaptation), which already constrains updates to a small set of trainable parameters.

It takes this a step further by introducing sparsity – focusing on an even smaller, more crucial set of parameters.

The result? You're updating only about 1% of the model's parameters, dramatically reducing computational load and memory requirements.

Benefits?

Reduced Computational Requirements: By updating only a sparse subset of parameters, SLoRA dramatically lowers the computational load.

Faster Fine-Tuning Process: The sparse updates enable quicker convergence, speeding up the fine-tuning process.

Lower Memory Usage: The reduced number of updates translates to lower memory requirements, making it feasible to deploy models on devices with limited memory.

Potential for Improved Model Performance: Efficient parameter updates can lead to models that are not only faster but also potentially more robust and adaptable to specific tasks.
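For a feel of the memory side, here is a back-of-the-envelope sketch. It assumes fp32 gradients and an Adam-style optimizer that keeps two moment estimates per trainable parameter, and it uses a hypothetical 7B-parameter model. It deliberately ignores the model weights and activations, which are needed either way.

```python
BYTES_FP32 = 4


def training_state_bytes(trainable_params: int) -> int:
    """Bytes for fp32 gradients plus Adam's two moment estimates per trainable parameter."""
    gradients = trainable_params * BYTES_FP32
    adam_moments = 2 * trainable_params * BYTES_FP32
    return gradients + adam_moments


total_params = 7_000_000_000        # hypothetical 7B-parameter model
sparse_share = total_params // 100  # the article's "about 1%" figure

full_gb = training_state_bytes(total_params) / 1e9
sparse_gb = training_state_bytes(sparse_share) / 1e9

print(f"all parameters trainable: ~{full_gb:.0f} GB of gradient and optimizer state")
print(f"about 1% trainable      : ~{sparse_gb:.2f} GB of gradient and optimizer state")
```

Under these assumptions the gradient and optimizer state shrinks in direct proportion to the number of trainable parameters, which is where most of the memory saving comes from.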

How to use SLoRA in Your Projects:

Initialize the Sparse Matrix: Begin by setting up the sparse matrix with low-rank decomposition.

Apply Sparse Updates: Update only the critical parameters as identified by the sparsity constraints.

Train the Model: Proceed with the fine-tuning process, leveraging the computational efficiency of SLoRA.
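The three steps above can be sketched on a toy problem. Everything here is an assumption made for illustration: a 4 x 4 linear layer, a fixed diagonal mask, synthetic regression data, and plain gradient descent written without any libraries. It is not the paper's training procedure or the Tune Studio implementation, only a way to see the moving parts.

```python
import random


def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    return [list(col) for col in zip(*m)]


def hadamard(a, b):
    return [[x * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def effective_weights(W, B, A, M):
    """Frozen weights plus the masked low-rank update: W + M * (B @ A)."""
    delta = hadamard(M, matmul(B, A))
    return [[w + d for w, d in zip(rw, rd)] for rw, rd in zip(W, delta)]


def loss_and_grads(W, B, A, M, X, Y):
    """Mean squared error over the batch and its gradients with respect to B and A only."""
    n = len(X)
    preds = matmul(X, transpose(effective_weights(W, B, A, M)))  # n x d_out
    errors = [[p - y for p, y in zip(rp, ry)] for rp, ry in zip(preds, Y)]
    loss = sum(e * e for row in errors for e in row) / (2 * n)
    grad_W = [[g / n for g in row] for row in matmul(transpose(errors), X)]
    G = hadamard(M, grad_W)  # Step 2: gradient flows only through masked entries
    return loss, matmul(G, transpose(A)), matmul(transpose(B), G)


rng = random.Random(0)
d_out, d_in, rank, n = 4, 4, 2, 32  # toy sizes

W = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]  # frozen weights
W_before = [row[:] for row in W]

# Step 1: initialize the low-rank factors and the sparsity mask.
B = [[0.0] * rank for _ in range(d_out)]  # zero init, so training starts exactly at W
A = [[rng.gauss(0, 0.5) for _ in range(d_in)] for _ in range(rank)]
M = [[1 if r == c else 0 for c in range(d_in)] for r in range(d_out)]  # hypothetical mask

# Synthetic task: the target weights differ from W only on the masked entries.
W_target = [[w + 0.5 * m for w, m in zip(rw, rm)] for rw, rm in zip(W, M)]
X = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(n)]
Y = matmul(X, transpose(W_target))

# Step 3: train B and A with gradient descent while W stays frozen.
lr, steps = 0.05, 300
initial_loss, _, _ = loss_and_grads(W, B, A, M, X, Y)
for _ in range(steps):
    _, grad_B, grad_A = loss_and_grads(W, B, A, M, X, Y)
    B = [[b - lr * g for b, g in zip(rb, rg)] for rb, rg in zip(B, grad_B)]
    A = [[a - lr * g for a, g in zip(ra, rg)] for ra, rg in zip(A, grad_A)]
final_loss, _, _ = loss_and_grads(W, B, A, M, X, Y)

print(f"loss: {initial_loss:.4f} -> {final_loss:.4f}")
```

In practice you would use an autograd framework rather than hand-written gradients; the sketch spells them out only to show that the frozen matrix `W` is never written to and that the mask decides which entries of the update can change.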

For a more detailed guide, check out this paper: https://arxiv.org/pdf/2308.06522

SLoRA's Secret Sauce:

Here's where SLoRA really flexes its muscles. By dramatically reducing the number of parameters updated during fine-tuning, SLoRA cuts the work done for every token you train on: fewer weight gradients to compute and fewer optimizer states to keep in memory. It's like having a car that can drive the same distance using only a fraction of the fuel.

Less compute and memory per token = Lower costs

Efficient updates = More bang for your buck

Smaller hardware footprint = Stretch your budget further

Let's say you're fine-tuning a model on a specific task. Traditional methods process millions of tokens while updating every parameter. With SLoRA, the same tokens update only a fraction of the parameters, potentially cutting your cost per token (and thus, your overall costs)!

Conclusion

SLoRA represents a significant leap forward in efficient model fine-tuning. It empowers developers to unlock the full potential of LLMs while being mindful of resources and costs.

Ready to give SLoRA a try? At Tune AI, we implemented this approach to make models more accessible and provide an efficient way to fine-tune and serve LLMs.

Head over to Tune Studio and start exploring! Share your experiences and let's build a more accessible and sustainable future for AI together.

Written by

Abhishek Mishra

DevRel Engineer

Edited by

Rohan Pooniwala

CTO