State Space Models: Falcon Mamba, SLMs Take a Leap

Aug 21, 2024

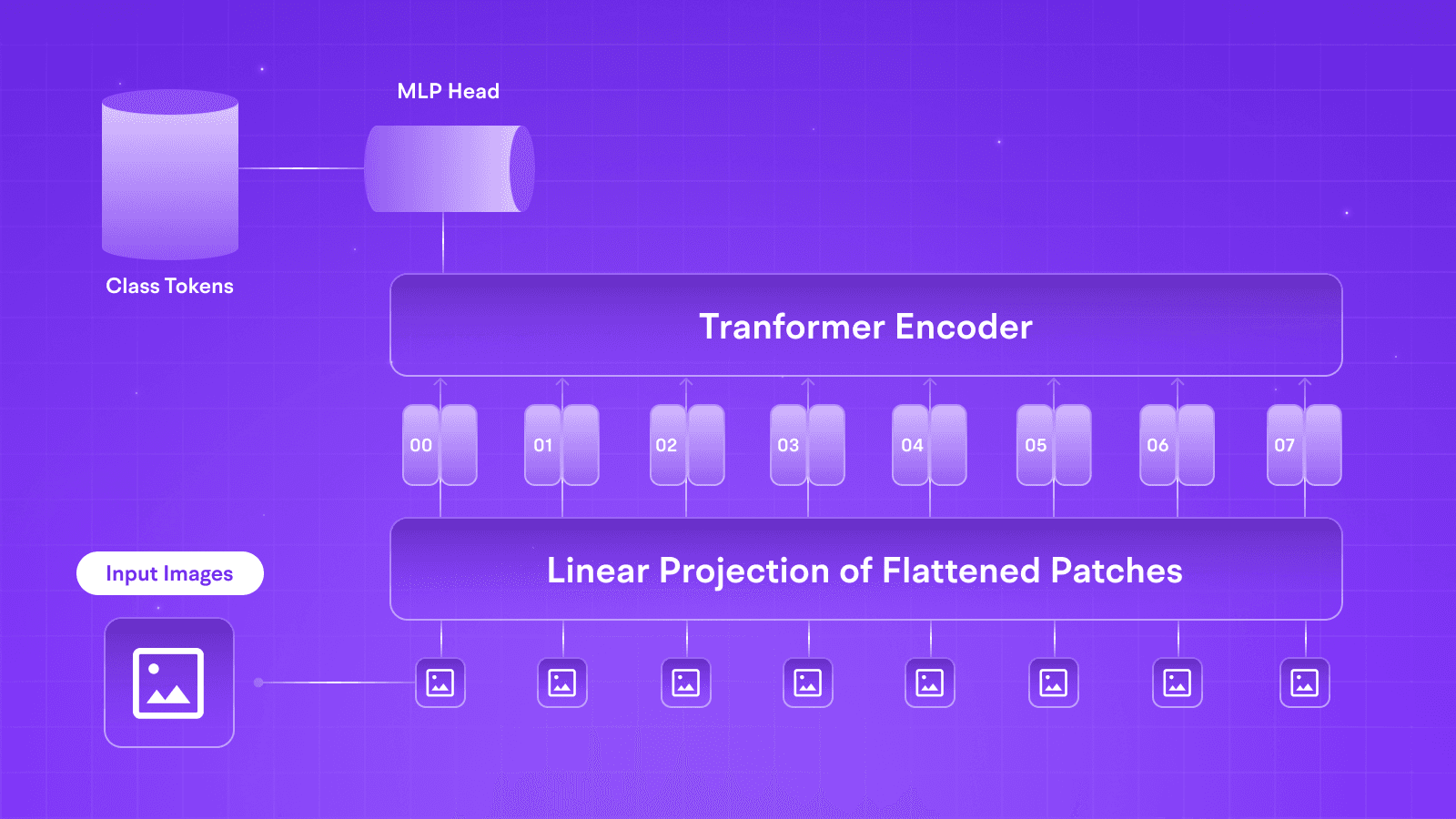

Since their introduction, transformers have proven to be handy and versatile tools for deep learning. With use cases ranging from computer vision to NLP, they hold great importance in the modern Generative AI scene. However, as those use cases have multiplied, so have the tools and methods that complement this architecture.

In this blog, we look at one such method called State Space Models (SSMs), a class of models that describe the evolution of a system over time in terms of internal states. We will explore how this method and its progeny shape parts of the Generative AI scene through TII’s new model, Falcon Mamba 7B.

State Space Models

State Space Models take a different approach from the more popular transformer models. Take a very long book. Say you are trying to read it and remember what happens in every chapter. Transformers try to keep every part of the book in view at once. This is how ChatGPT or Llama 3.1 can switch between contexts while building upon your conversation.

What if the book is very, very big? Things get tricky because a transformer’s attention compares each word with every other word to understand the context, so the work grows roughly with the square of the text length. SSMs take a different approach. Instead of holding everything in view at once, they update a "state" as they read. You can think of this "state" as a mental note that is revised as you read more. So, instead of holding onto every detail from the beginning, SSMs focus on the most critical information that helps them understand the story as they go along.
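To make the scaling difference concrete, here is a rough sketch that only counts work. Full self-attention compares every token with every other token, while a state-based reader performs one update per token. The function names are illustrative, and the counts ignore constant factors such as model width.

```python
def attention_comparisons(num_tokens: int) -> int:
    """Token-to-token comparisons in full self-attention (every token vs. every token)."""
    return num_tokens * num_tokens


def state_updates(num_tokens: int) -> int:
    """Updates made by a state-based reader (one per token)."""
    return num_tokens


for n in (1_000, 10_000, 100_000):
    print(f"{n:>7,} tokens: {attention_comparisons(n):>15,} comparisons "
          f"vs {state_updates(n):>7,} state updates")
```

Making the text ten times longer makes the attention count a hundred times larger, while the number of state updates grows only tenfold.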

Here’s where it gets a bit technical:

1. Continuous Update: SSMs update their "state" with each new piece of input. This "state" is like a summary that changes as more text is processed.

2. Handling Long Texts: Because the state has a fixed size and is updated step by step, SSMs can handle much longer text (like an entire book) without memory or compute growing out of hand. This makes them efficient for tasks like translating languages or summarizing long documents.
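The two points above can be sketched with a tiny discrete linear SSM. This is a minimal illustration, assuming the standard recurrence h_t = A h_{t-1} + B x_t with output y_t = C h_t. The matrices and inputs are hypothetical toy values, not parameters from any real model.

```python
import numpy as np


def run_ssm(A, B, C, inputs, state=None):
    """Run a discrete linear SSM: h_t = A @ h_{t-1} + B * x_t, y_t = C @ h_t."""
    if state is None:
        state = np.zeros(A.shape[0])
    outputs = []
    for x_t in inputs:
        state = A @ state + B * x_t   # fold the new input into the running summary
        outputs.append(C @ state)     # read the output from the summary
    return np.array(outputs), state


# Hypothetical toy parameters: a 2-dimensional state and scalar inputs.
A = np.array([[0.9, 0.0], [0.0, 0.5]])
B = np.array([1.0, 1.0])
C = np.array([0.5, 0.5])

outputs, final_state = run_ssm(A, B, C, inputs=[1.0, 0.0, 0.0, 2.0])
print(outputs)
print(final_state.shape)  # the state size does not depend on the sequence length
```

However long the input is, the only thing carried from one step to the next is the fixed-size `state` vector.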

So, while transformers are powerful, SSMs promise a more efficient way to handle long texts while focusing on what’s important, or so we thought. Traditional SSMs update their state in the same way no matter what they are reading, so they treat all incoming information with equal importance. That is a problem for language, where the model has to decide what to keep and what to discard, and it can hurt the quality of the results. Selective SSMs are a further sub-category that equips SSMs with a selection mechanism, which plays a role similar to the popular attention mechanism by letting them focus on or ignore certain information.

Mamba: A Selective SSM

Mamba is one of the newer architectures that builds on state space models to handle even longer sequences of data, like text, efficiently. It works by continuously updating an internal “state,” much like traditional SSMs. What makes Mamba unique is its selection mechanism.

As the model processes sequences, Mamba can dynamically choose the parts of the input that are more important to the inference and the conversation in general. This selective focus enables the model to manage long texts effectively, similar to transformers, but within the state space framework.

This is achieved by making the SSM’s parameters depend on the input, combined with a gating mechanism. Rather than computing attention weights over all previous tokens, Mamba derives its step size and its input and output projections from the current token, so each token influences how strongly it is written into the state and how quickly older information fades. This helps manage long sequences by prioritizing important information and ignoring less relevant data.
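As a rough sketch of the selective idea, the code below makes the step size and the write and read projections functions of the current token, which is the core of a selective SSM. It is a simplified illustration rather than Mamba's actual implementation: the weight names `w_delta`, `W_B`, and `W_C` are hypothetical, a single step size is shared across channels, and the hardware-aware scan and gating branch are omitted.

```python
import numpy as np


def softplus(z):
    """Smooth function that keeps the step size positive."""
    return np.log1p(np.exp(z))


def selective_scan(x, A, w_delta, W_B, W_C):
    """Simplified selective SSM over a sequence x of shape (length, channels).

    A:       (channels, state) negative decay rates, fixed after training
    w_delta: (channels,)       hypothetical weights giving one step size per token
    W_B/W_C: (channels, state) hypothetical weights giving B_t and C_t per token
    """
    length, channels = x.shape
    h = np.zeros_like(A)                      # fixed-size state: (channels, state)
    y = np.zeros((length, channels))
    for t in range(length):
        delta = softplus(x[t] @ w_delta)      # input-dependent step size (scalar)
        B_t = x[t] @ W_B                      # input-dependent write direction: (state,)
        C_t = x[t] @ W_C                      # input-dependent read direction: (state,)
        decay = np.exp(delta * A)             # small delta -> decay near 1 -> keep old state
        h = decay * h + delta * np.outer(x[t], B_t)  # large delta -> write the token in
        y[t] = h @ C_t
    return y, h


rng = np.random.default_rng(0)
x = rng.normal(size=(6, 4))                   # 6 tokens, 4 channels
A = -np.exp(rng.normal(size=(4, 3)))          # negative rates so the state decays
y, h = selective_scan(x, A, rng.normal(size=4),
                      rng.normal(size=(4, 3)), rng.normal(size=(4, 3)))
print(y.shape, h.shape)
```

Because `delta`, `B_t`, and `C_t` are recomputed for every token, the same layer can nearly ignore one token and store another, which a fixed-parameter SSM cannot do.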

Falcon Mamba 7B

Let's look at a state space model that stands on an equal footing with the transformer status quo: Falcon Mamba 7B. Developed by the Technology Innovation Institute (TII), it introduces significant improvements in handling longer sequences on the same hardware as some more prominent players.

In performance tests, Falcon Mamba 7B demonstrated impressive capabilities. It outperformed leading transformer models like Meta’s Llama 3 8B and Mistral 7B in handling longer contexts and maintaining consistent speed during token generation. The model’s architecture allows it to fit longer sequences, theoretically accommodating unlimited context lengths by processing tokens sequentially or in manageable chunks.
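The point about chunks follows from the recurrence itself: if only the state is carried forward, reading a sequence in pieces gives the same result as reading it in one pass. Here is a minimal sketch, assuming a toy scalar recurrence for illustration. It is not Falcon Mamba's actual layer.

```python
import numpy as np


def scan(inputs, state, decay=0.9):
    """Toy recurrence: state = decay * state + x. Returns per-step outputs and the final state."""
    outputs = []
    for x_t in inputs:
        state = decay * state + x_t
        outputs.append(state)
    return np.array(outputs), state


tokens = np.arange(1.0, 11.0)  # stand-in for a long sequence of 10 inputs

# Process everything in one pass.
full_outputs, full_state = scan(tokens, state=0.0)

# Process the same inputs in chunks of 4, carrying only the state between chunks.
state = 0.0
chunk_outputs = []
for start in range(0, len(tokens), 4):
    out, state = scan(tokens[start:start + 4], state)
    chunk_outputs.append(out)
chunk_outputs = np.concatenate(chunk_outputs)

print(np.allclose(full_outputs, chunk_outputs))
```

The memory needed between chunks is a single state value here, regardless of how many tokens came before.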

Benchmark results showed Falcon Mamba excelling in several tests, including ARC, TruthfulQA, and GSM8K, where it outperformed or matched the performance of its transformer counterparts. Despite these successes, Falcon Mamba's performance in some benchmarks, such as MMLU and HellaSwag, was slightly behind other models. TII plans to optimize Falcon Mamba further to enhance its performance and expand its application scope.

Conclusion

State Space Models are a relatively new technique for large language models. With the addition of selectivity, they have proven to be models you can rely on when hardware is in a pinch. With a competitive footing against models from Meta and Mistral, Falcon Mamba 7B shows an excellent implementation of Selective State Space Models in LLMs.

We invite you to stick around as we release more content on LLM Optimization and technical tutorials, enabling you to take advantage of the wealth of large open-source language models.

Written by

Aryan Kargwal

Data Evangelist