
Tune AI Integrates Meta’s Latest Llama 3.1

Jul 23, 2024


We're excited to share that Meta has just unveiled Llama 3.1, and at Tune AI, we made the models available on our platform within an hour of their release!

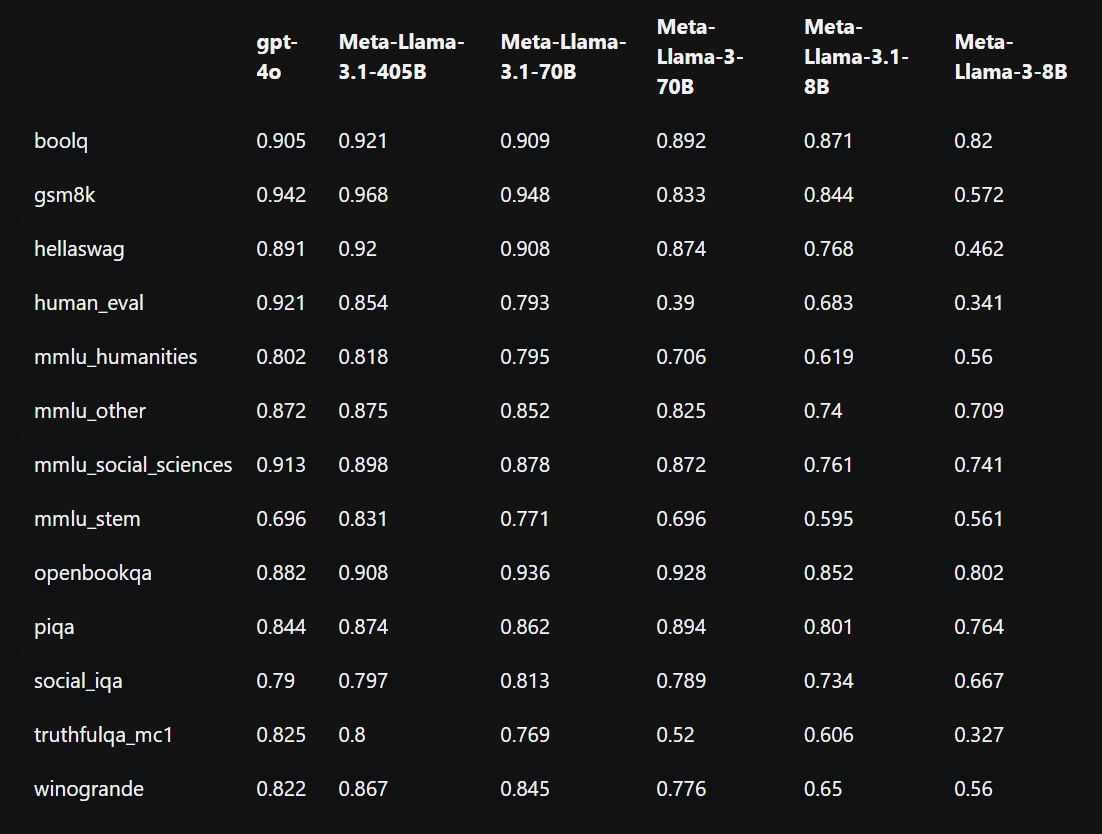

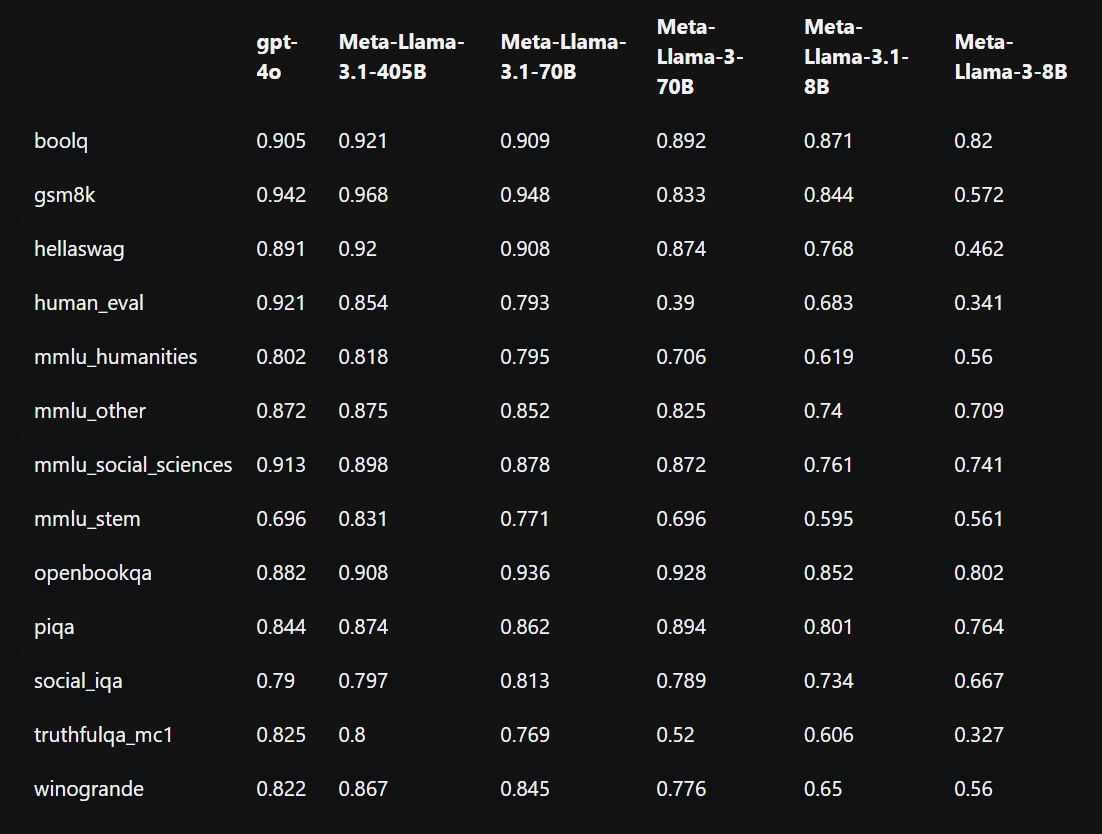

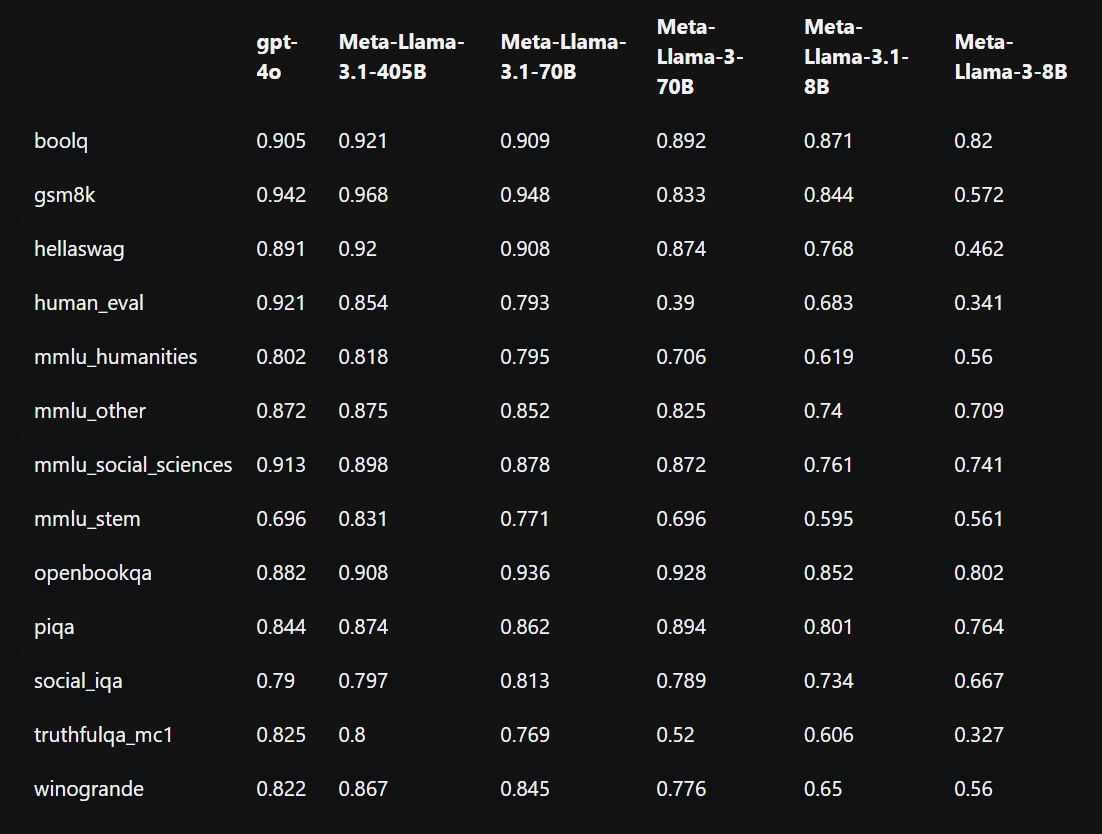

The 8B, 70B, and 405B parameter models of Llama 3.1 deliver state-of-the-art performance and outperform other leading models. According to Meta's published benchmarks, Llama 3.1 405B beats GPT-4 on most metrics.

A comprehensive model card and benchmark results are available in the meta-llama/llama-models GitHub repository.

We believe in Meta’s vision that open-source AI is the path forward. You can now access Llama 3.1 directly through Tune Chat and Tune Studio.

Getting Started with Tune Studio

Step 1: Sign Up

Sign up for Tune Studio to unlock access to Llama 3.1.

Using Llama 3.1 via the UI and API

Step 2: Access the Playground

Once signed in, navigate to the playground. Select the chat option and pick the desired Llama 3.1 model to experiment with.

Step 3: Get the API Code

On the right-hand side of the playground screen, you’ll find the “Get Code” button. Click it to get starter code for calling the Llama 3.1 API. The code is available in multiple languages, making it easy to integrate into your projects.
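For orientation, here is a minimal Python sketch of what such a call can look like. It assumes an OpenAI-style chat-completions request with bearer-token authentication; the endpoint URL, model ID, and `TUNE_API_KEY` environment variable are hypothetical placeholders, so copy the real values from the “Get Code” panel.

```python
import json
import os
import urllib.request

# Hypothetical placeholders: replace with the endpoint URL and model ID
# shown in the "Get Code" panel of Tune Studio.
API_URL = "https://example.invalid/chat/completions"
MODEL_ID = "llama-3.1-70b-instruct"


def build_chat_request(prompt: str, model: str = MODEL_ID,
                       max_tokens: int = 256, temperature: float = 0.7) -> dict:
    """Build an OpenAI-style chat-completions payload (assumed format)."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
        "max_tokens": max_tokens,
        "temperature": temperature,
        "stream": False,
    }


def send_chat_request(payload: dict, api_key: str, url: str = API_URL) -> dict:
    """POST the payload as JSON and return the decoded JSON response."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Assumed bearer-token auth; check the generated code for the real header.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=60) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    # Only builds and prints the payload; no network call is made here.
    payload = build_chat_request("Summarize Llama 3.1 in one sentence.")
    print(json.dumps(payload, indent=2))
```

With a real endpoint and key, `send_chat_request(payload, os.environ["TUNE_API_KEY"])` would return the response; in OpenAI-style APIs the reply text sits at `["choices"][0]["message"]["content"]`.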

Creating Datasets for Fine-Tuning

Step 4: Create a New Dataset

Go to the datasets menu and create a new dataset by giving it a name.

Step 5: Generate Conversations

Return to the playground, select “threads” from the dropdown menu, and choose the dataset you just created. Pick the Llama 3.1 model and start generating conversations.
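To picture what a saved conversation amounts to, the sketch below serializes one thread as a JSON Lines record. It assumes an OpenAI-style `{"messages": [...]}` layout with `system`/`user`/`assistant` roles; this is an illustration, not Tune Studio's documented dataset schema, and the function names are hypothetical.

```python
import json
from typing import Dict, List

Message = Dict[str, str]

# Roles accepted by the assumed chat format.
VALID_ROLES = {"system", "user", "assistant"}


def thread_to_record(messages: List[Message]) -> str:
    """Serialize one conversation thread as a single JSON Lines record.

    Assumes an OpenAI-style {"messages": [...]} layout (hypothetical schema).
    """
    for m in messages:
        if m.get("role") not in VALID_ROLES:
            raise ValueError(f"unexpected role: {m.get('role')!r}")
        if not isinstance(m.get("content"), str):
            raise ValueError("message content must be a string")
    return json.dumps({"messages": messages}, ensure_ascii=False)


def write_jsonl(threads: List[List[Message]], path: str) -> int:
    """Write each thread as one line; return the number of records written."""
    with open(path, "w", encoding="utf-8") as f:
        for thread in threads:
            f.write(thread_to_record(thread) + "\n")
    return len(threads)
```

One JSON object per line keeps each conversation independent, which makes it easy to append new threads or drop bad ones without rewriting the whole file.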

Step 6: Edit and Save Responses

If the responses aren’t perfect, no worries! You can edit them right there. Once you’re satisfied with the responses, you can download your dataset or use it to fine-tune your model directly within Tune Studio.
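If you download the dataset, a quick sanity check before fine-tuning can catch malformed rows. This sketch assumes the exported file is JSON Lines in the same OpenAI-style `{"messages": [...]}` layout; the checks (and the rule that each example should end with an assistant turn) are heuristics, not Tune Studio requirements.

```python
import json
from typing import List, Tuple


def validate_jsonl(lines: List[str]) -> Tuple[int, List[str]]:
    """Check exported dataset lines; return (valid_count, problems).

    Assumes the OpenAI-style {"messages": [...]} layout; adapt the
    checks if your exported file uses a different schema.
    """
    valid, problems = 0, []
    for lineno, line in enumerate(lines, start=1):
        if not line.strip():
            continue  # tolerate blank lines, e.g. a trailing newline
        try:
            record = json.loads(line)
        except json.JSONDecodeError as exc:
            problems.append(f"line {lineno}: invalid JSON ({exc.msg})")
            continue
        messages = record.get("messages") if isinstance(record, dict) else None
        if not isinstance(messages, list) or not messages:
            problems.append(f"line {lineno}: missing or empty 'messages'")
            continue
        last = messages[-1]
        # Heuristic: a training example should end with the response to learn.
        if not isinstance(last, dict) or last.get("role") != "assistant":
            problems.append(f"line {lineno}: last turn is not from the assistant")
            continue
        valid += 1
    return valid, problems
```

Usage, with a hypothetical file name: `with open("my-dataset.jsonl", encoding="utf-8") as f: valid, problems = validate_jsonl(f.readlines())`.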

Tune Studio offers the flexibility to work both in the cloud and on-premises, so you can tailor the environment to your needs. Get started with Tune Studio now and experience the cutting-edge capabilities of Llama 3.1.

Written by

Chandrani Halder

Head of Product