Integrating Knowledge Graphs into LLMs: A Comprehensive Guide

May 30, 2024

Retrieval-Augmented Generation (RAG) has been the talk of the town lately, with more and more enterprise LLM applications built on the technique. In practice, however, frequent users will notice that RAG pipelines struggle with multi-part questions that require connecting separate pieces of information. This is where Knowledge Graphs come in: a tool in your arsenal against unreliable LLM answers.

In this blog, we explain what Knowledge Graphs are, how they capture the relationships between data points, and how they can be combined with LLMs to improve performance.

Understanding Knowledge Graphs

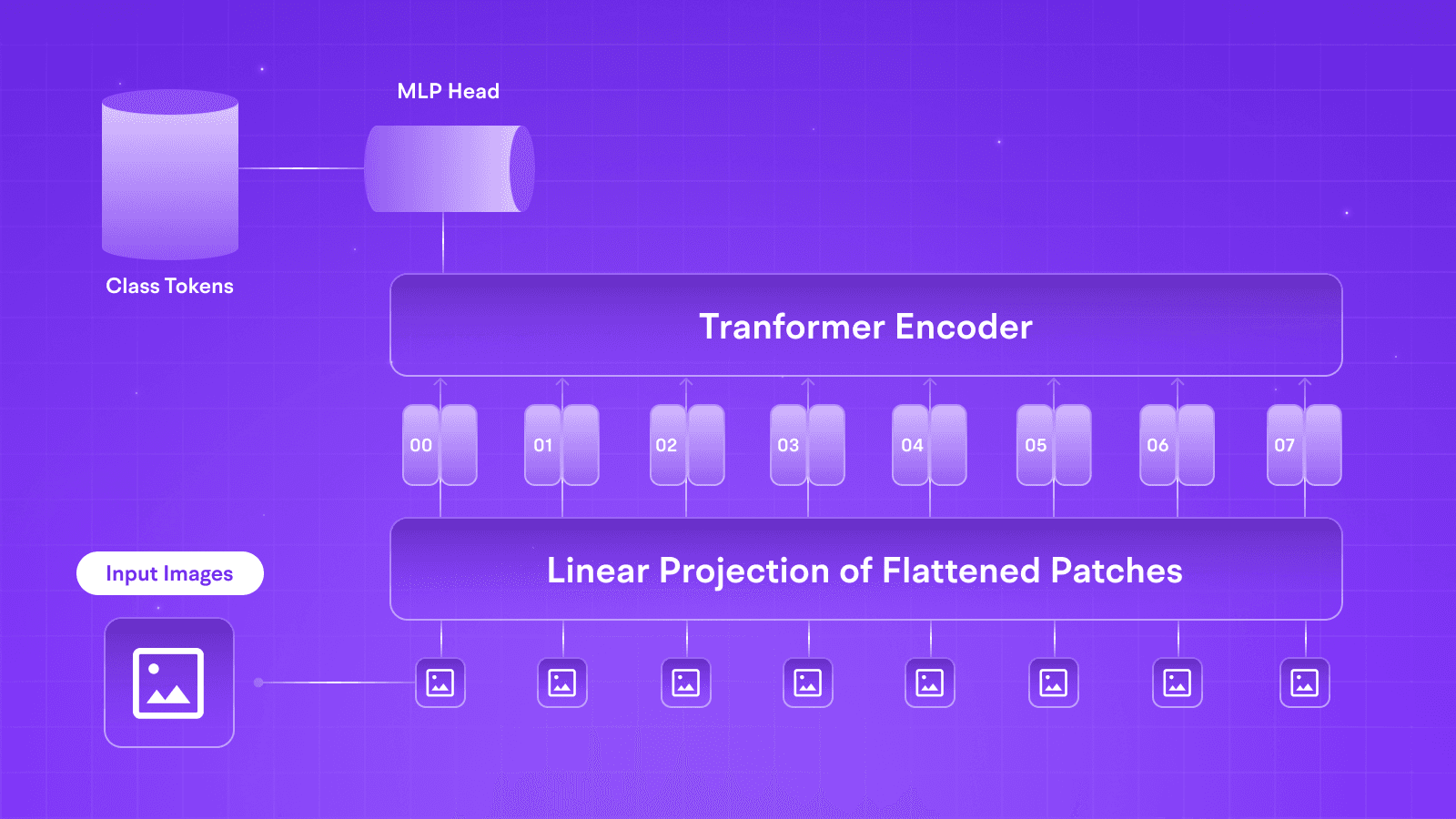

Many LLM pipelines try to condense information at the source and reuse that condensed form in later query-based operations. The condensed information is more concise, carries less noise, and takes up less of the prompt's token budget.

Knowledge graphs build on this idea. A knowledge graph can be pictured as a network of real-world entities, such as objects, subjects, events, and situations, extracted from a dataset and connected by the relationships between them.
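To make the idea concrete, here is a minimal sketch in Python of a knowledge graph stored as (subject, relation, object) triples. The entities, relation names such as `born_in`, and the helpers `build_index` and `follow` are hypothetical and only for illustration; a real system would use a graph database or an RDF store.

```python
from collections import defaultdict

# A toy knowledge graph as (subject, relation, object) triples.
# Entities and relation names are illustrative, not from any real dataset.
TRIPLES = [
    ("Marie Curie", "born_in", "Warsaw"),
    ("Warsaw", "located_in", "Poland"),
    ("Marie Curie", "field", "Physics"),
    ("Pierre Curie", "spouse_of", "Marie Curie"),
]


def build_index(triples):
    """Map each subject to its outgoing edges as (relation, object) pairs."""
    index = defaultdict(list)
    for subj, rel, obj in triples:
        index[subj].append((rel, obj))
    return index


def follow(index, start, relations):
    """Follow a chain of relations from `start` and return all entities reached."""
    frontier = {start}
    for rel in relations:
        # Keep only the neighbours reached through the current relation.
        frontier = {obj for node in frontier for r, obj in index.get(node, []) if r == rel}
    return frontier


index = build_index(TRIPLES)
# Two-hop question: "In which country was Marie Curie born?"
print(follow(index, "Marie Curie", ["born_in", "located_in"]))
```

In this toy setting, each extra hop is just one more relation in the list, which is why explicit graphs can answer multi-part questions more predictably than similarity search over text chunks.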

Knowledge Graphs make these relationships explicit. For relationship-centric retrieval, this can be cheaper than a plain RAG pipeline, because related facts are reached by following edges rather than by searching over text chunks. Knowledge Graphs can also use Semantic Web Stack standards such as RDF (Resource Description Framework), which provides a uniform way to represent many types of data and content.

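RDF also comes with standard serialization formats, such as Turtle, N-Triples, and JSON-LD, so the same graph can be stored, exchanged, and queried deterministically. This makes the process more mechanical and predictable than relying on a stochastic Large Language Model to recall the same facts.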
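As an illustration of what RDF serialization looks like, the sketch below writes triples in the N-Triples format, one `<subject> <predicate> <object> .` statement per line, using only the Python standard library. The `http://example.org/` namespace, the `Lit` wrapper, and the helper names are assumptions for this example; in practice, a library such as RDFLib (listed later in this post) would handle IRIs and escaping for you.

```python
from dataclasses import dataclass

# Hypothetical namespace used only for this example.
EX = "http://example.org/"


@dataclass(frozen=True)
class Lit:
    """Marks an object as a plain string literal rather than an IRI."""
    value: str


def iri(name):
    """Build an IRI in the example namespace (assumes names are IRI-safe apart from spaces)."""
    return "<" + EX + name.replace(" ", "_") + ">"


def literal(value):
    """Quote a string as an N-Triples literal, escaping backslashes, quotes, and line breaks."""
    escaped = (
        value.replace("\\", "\\\\")
        .replace('"', '\\"')
        .replace("\n", "\\n")
        .replace("\r", "\\r")
    )
    return '"' + escaped + '"'


def to_ntriples(triples):
    """Serialize (subject, predicate, object) triples, one statement per line."""
    lines = []
    for subj, pred, obj in triples:
        obj_term = literal(obj.value) if isinstance(obj, Lit) else iri(obj)
        lines.append(f"{iri(subj)} {iri(pred)} {obj_term} .")
    return "\n".join(lines) + "\n"


print(to_ntriples([
    ("Marie Curie", "born_in", "Warsaw"),
    ("Marie Curie", "nickname", Lit('Known as "Madame Curie"')),
]))
```

Because the output is plain, line-oriented text, the same list of triples always produces the same serialization, which is the kind of mechanical behaviour a stochastic model cannot guarantee.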

Strategies for Integrating Knowledge Graphs in LLMs

Knowledge Graphs generally fall into three categories, each of which can be used to strengthen LLMs:

Encyclopedic Knowledge Graphs: graphs representing general knowledge about the real world. Examples include large knowledge bases such as Wikidata, Freebase, DBpedia, and YAGO.

Commonsense Knowledge Graphs: graphs capturing knowledge about everyday concepts, the kind of tacit knowledge people rarely state explicitly, as opposed to the general facts found in encyclopedic graphs. Examples include TransOMCS and CausalBank.

Domain-Specific Knowledge Graphs: graphs that represent knowledge about a particular field or niche topic. One example is UMLS (Unified Medical Language System), a knowledge source for the medical domain.

With these types in mind, let us look at how Knowledge Graphs can be applied to large language models and used to make LLMs smarter.

Because LLMs are not inherently good at deriving explicit relationships and conclusions from a corpus of data, KG-enhanced pipelines can improve them substantially at several stages:

Pre-Training: LLMs can be augmented with Knowledge Graph embeddings to enrich their understanding of relationships between different entities in the data corpus.

Inference: At inference time, relevant facts retrieved from the KG can be injected directly into the prompt, improving the relevance and accuracy of the model's answers.

Interpretability: KG integration makes it possible to trace an answer back to the specific facts and sources that support it, helping justify a particular response to a prompt.
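The inference and interpretability ideas above can be sketched together: find the entities mentioned in a question, retrieve the KG facts about them, inject those facts into the prompt, and keep each fact's source so the answer can cite it. This is a minimal sketch assuming naive substring matching for entity detection; the `Fact` class, the `kg:triple/...` source identifiers, and the prompt wording are hypothetical, and the actual LLM call is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fact:
    subject: str
    relation: str
    obj: str
    source: str  # provenance of the fact, kept so answers can cite it


# Hypothetical knowledge graph with made-up source identifiers.
KG = [
    Fact("Marie Curie", "born_in", "Warsaw", "kg:triple/1"),
    Fact("Warsaw", "located_in", "Poland", "kg:triple/2"),
    Fact("Pierre Curie", "field", "Physics", "kg:triple/3"),
]


def mentioned_entities(question, facts):
    """Naive entity linking: any KG entity name that appears verbatim in the question."""
    names = {f.subject for f in facts} | {f.obj for f in facts}
    return {name for name in names if name in question}


def retrieve_facts(facts, entities):
    """Keep facts whose subject or object is one of the mentioned entities."""
    return [f for f in facts if f.subject in entities or f.obj in entities]


def build_prompt(question, facts):
    """Inject numbered facts with their sources so the model can cite them."""
    lines = [
        f"[{i}] {f.subject} {f.relation} {f.obj} (source: {f.source})"
        for i, f in enumerate(facts, start=1)
    ]
    context = "\n".join(lines) if lines else "(no matching facts)"
    return (
        "Answer using only the facts below and cite them by number.\n"
        f"Facts:\n{context}\n\n"
        f"Question: {question}\n"
    )


question = "Where was Marie Curie born?"
facts = retrieve_facts(KG, mentioned_entities(question, KG))
print(build_prompt(question, facts))  # send this prompt to the LLM of your choice
```

Because every injected fact carries a source tag, a cited answer can be checked against the graph. A production pipeline would replace the substring matching with proper entity linking and would likely retrieve multiple hops of context.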

Knowledge Graphs address several governance issues arising from unchecked data sources and missing citations in popular large language models, such as OpenAI's GPT models and Anthropic's Claude. However flashy and quick their inferences are, these models always need to be taken with a grain of salt. Closed-source models trained on data with a fixed cutoff have no live connection to the current state of the world; they often cannot reliably describe even themselves, let alone help with the literature review for your college project.

Future Directions and Challenges

Although real-world integrations of Knowledge Graphs and Large Language Models are still maturing, we are starting to see the beginnings of this long-anticipated collaboration: more deliberate, structured use of data rather than throwing a tonne of Reddit pages at a model to fine-tune it.

Some promising avenues for research enthusiasts include the following:

KGs for Hallucination Detection in LLMs: As mentioned earlier, KGs can aid pre-training and can also be used to check and correct knowledge relationships dynamically. With facts stored in a well-defined, serialized form, KGs can substantially reduce hallucinations in LLMs, and when a wrong inference does occur, it can be traced back to the faulty data that produced it.

Synergy for Bidirectional Reasoning: KGs and LLMs are complementary pieces of a pipeline. A desirable future direction is to build channels between the two using advanced RAG applications, enabling bidirectional reasoning in which each overcomes the other's shortcomings.
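As a rough illustration of KG-based hallucination detection, the sketch below checks factual claims against a knowledge graph and labels each one as supported, contradicted, or unknown. It assumes the claims have already been extracted from the LLM's output as triples (that extraction step is not shown), and it treats the objects stored for each (subject, relation) pair as the complete set of valid answers, which is a simplification.

```python
def check_claims(claims, kg):
    """Label each (subject, relation, object) claim against the KG triples.

    - "supported":    the KG contains exactly this triple
    - "contradicted": the KG holds other objects for this subject and relation
    - "unknown":      the KG says nothing about this subject and relation
    """
    known = {}
    for subj, rel, obj in kg:
        known.setdefault((subj, rel), set()).add(obj)

    verdicts = {}
    for claim in claims:
        subj, rel, obj = claim
        objects = known.get((subj, rel))
        if objects is None:
            verdicts[claim] = "unknown"
        elif obj in objects:
            verdicts[claim] = "supported"
        else:
            verdicts[claim] = "contradicted"
    return verdicts


# Hypothetical KG and claims extracted from a model's answer.
kg = [("Marie Curie", "born_in", "Warsaw"), ("Warsaw", "located_in", "Poland")]
claims = [
    ("Marie Curie", "born_in", "Paris"),   # conflicts with the KG
    ("Warsaw", "located_in", "Poland"),    # matches the KG
    ("Marie Curie", "died_in", "Passy"),   # not covered by the KG
]
for claim, verdict in check_claims(claims, kg).items():
    print(verdict, claim)
```

Note that "unknown" is not the same as "false": a claim the graph does not cover needs some other check, and "contradicted" is only meaningful for relations that are genuinely single-valued, such as a birthplace.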

Further Resources

For the keener mind, we have compiled a list of Knowledge Graph libraries and integration libraries you can use to get started.

Library

RDFLib - Knowledge Graph Library

Stardog - Knowledge Graph Library

Neo4j - Knowledge Graph Library

GraphDB - Knowledge Graph Library

KG-BERT - Knowledge Graph Integration Library

FactCC - Factual Consistency Checking Model

Conclusion

As data ingestion pipelines grow slower while data-hungry large language models demand ever more data, we must devise new strategies for handling it. Knowledge Graphs, which work naturally alongside RAG pipelines, are a promising step in this direction.

Let us keep working on these existing and emerging research topics to build bidirectional reasoning and trust between data and models. We invite you to follow along as we explore more technical concepts that can boost LLM pipelines, whatever the application.

Written by

Aryan Kargwal

Data Evangelist

Edited by

Abhishek Mishra

DevRel Engineer